TL;DR

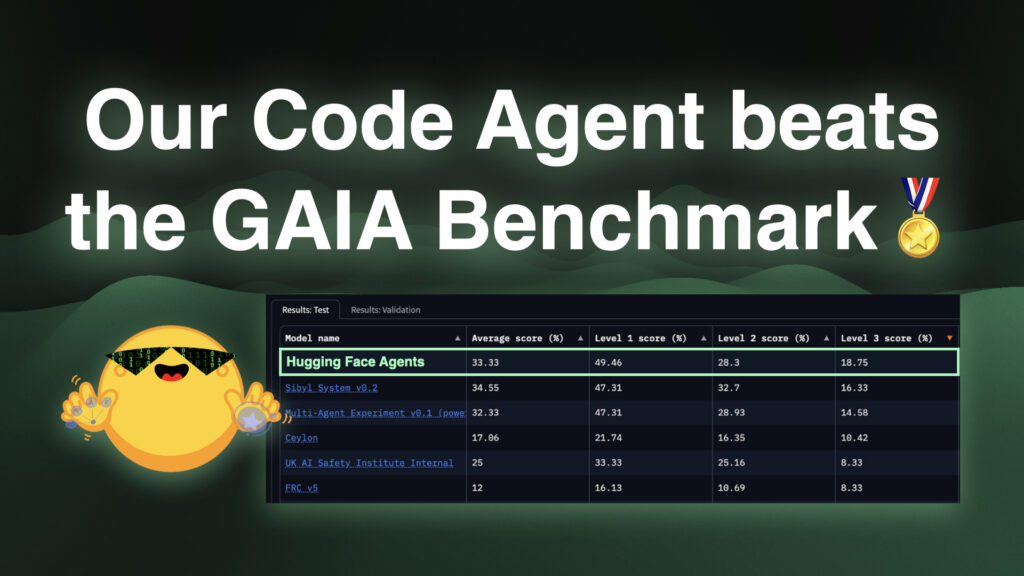

Experiments showed that Transformers Agents performs impressively well for building agentic systems, which prompted a closer look at its capabilities. A Code Agent built with the library was tested on the GAIA benchmark, arguably the most difficult and comprehensive agent benchmark, and reached a top position!

The framework transformers.agents has been upgraded to the stand-alone library smolagents. The two libraries have very similar APIs, making switching easy. The smolagents introduction blog is available here.

GAIA: a tough benchmark for Agents

What are agents?

Agents are systems based on Large Language Models (LLMs) that can invoke external tools as needed and iterate on subsequent steps based on the LLM’s output. These tools can range from a web search API to a Python interpreter.

Programs can be visualized as graphs with fixed structures. Agents, however, are systems where the LLM’s output dynamically alters the graph’s structure. An agent determines whether to call tool A, tool B, or no tool, and whether to proceed with another step, thereby modifying the graph’s flow. An LLM integrated into a fixed workflow, such as an LLM-as-a-judge setup, does not constitute an agent system, because its output does not change the graph’s structure.

An illustration shows two different systems performing Retrieval Augmented Generation: a classical system with a fixed graph, and an agentic system where a loop in the graph can be repeated as needed.
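The difference between the two graphs can be sketched in a few lines. This is a minimal illustration, not the library's implementation: `fixed_rag`, `agentic_rag`, the action dictionary format, and the stub functions are all hypothetical names introduced here.

```python
from typing import Callable, Dict, List


def fixed_rag(question: str,
              retrieve: Callable[[str], str],
              generate: Callable[[str, str], str]) -> str:
    """Fixed graph: always retrieve exactly once, then generate."""
    context = retrieve(question)
    return generate(question, context)


def agentic_rag(question: str,
                retrieve: Callable[[str], str],
                decide: Callable[[str, List[str]], Dict[str, str]],
                max_steps: int = 5) -> str:
    """Agentic graph: the model's output decides whether to loop again."""
    observations: List[str] = []
    for _ in range(max_steps):
        # Hypothetical action format: {"type": "search", "query": ...}
        # or {"type": "answer", "text": ...}.
        action = decide(question, observations)
        if action["type"] == "answer":
            return action["text"]
        observations.append(retrieve(action["query"]))
    return "No answer within the step budget."


# Stubs standing in for a retriever and an LLM (assumptions for the demo).
def stub_retrieve(query: str) -> str:
    return f"doc about {query}"


def stub_decide(question: str, observations: List[str]) -> Dict[str, str]:
    # Pretend the model wants two retrieval rounds before answering.
    if len(observations) < 2:
        return {"type": "search", "query": question}
    return {"type": "answer",
            "text": f"answer based on {len(observations)} documents"}


print(agentic_rag("some question", stub_retrieve, stub_decide))
```

In `fixed_rag` the sequence of calls is known before the program runs; in `agentic_rag` the number of retrieval rounds depends on what `decide` returns at each step.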

Agent systems enhance LLM capabilities. Further details are available in an earlier blog post on the release of Transformers Agents 2.0.

GAIA is arguably the most comprehensive benchmark for agents. Its questions are highly challenging and expose specific difficulties inherent in LLM-based systems.

Here is an example of a tricky question:

Which of the fruits shown in the 2008 painting “Embroidery from Uzbekistan” were served as part of the October 1949 breakfast menu for the ocean liner that was later used as a floating prop for the film “The Last Voyage”? Give the items as a comma-separated list, ordering them in clockwise order based on their arrangement in the painting starting from the 12 o’clock position. Use the plural form of each fruit.

This question presents several difficulties:

- Answering in a constrained format.

- Multimodal abilities to read the fruits from the image.

- Several pieces of information to gather, some depending on the others:

- The fruits on the picture

- The identity of the ocean liner used as a floating prop for “The Last Voyage”

- The October 1949 breakfast menu for the above ocean liner

- Together, these dependencies force the correct solving trajectory to chain several steps.

Solving this requires both high-level planning abilities and rigorous execution, which are precisely two areas where LLMs struggle.

Consequently, GAIA serves as an excellent test set for agent systems!

On GAIA’s public leaderboard, GPT-4-Turbo achieves less than 7% on average. The previous top submission was an Autogen-based solution utilizing a complex multi-agent system with OpenAI’s tool calling functions, reaching 40%.

Building the right tools 🛠️

Three main tools were employed to address GAIA questions:

a. Web browser

For web browsing, the Markdown web browser from the Autogen team’s submission was largely reused. It includes a Browser class that stores the current browser state and provides several tools for web navigation, such as visit_page, page_down or find_in_page. This tool returns markdown representations of the current viewport. While markdown compresses web page information significantly, potentially leading to some omissions compared to solutions like screenshots and vision models, the tool performed well overall without excessive complexity.

Note: A potential future improvement for this tool involves loading pages using the Selenium package instead of requests. This would enable the loading of JavaScript (many pages require JavaScript to load correctly) and the acceptance of cookies to access certain pages.
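The idea of a browser object that keeps state and exposes a viewport can be sketched as follows. This is a simplified stand-in, not the Autogen browser: the class name `TextBrowser`, the `load` method (used here in place of a network-backed visit_page), and the line-based viewport are assumptions made for illustration.

```python
class TextBrowser:
    """Hypothetical stand-in: holds page text and a viewport offset."""

    def __init__(self, viewport_lines: int = 3):
        self.viewport_lines = viewport_lines
        self.lines = []
        self.offset = 0

    def load(self, markdown_text: str) -> str:
        """Replace the current page with already-fetched markdown text."""
        self.lines = markdown_text.splitlines()
        self.offset = 0
        return self.viewport()

    def viewport(self) -> str:
        """Return only the lines currently visible."""
        end = self.offset + self.viewport_lines
        return "\n".join(self.lines[self.offset:end])

    def page_down(self) -> str:
        """Scroll one viewport down, stopping at the end of the page."""
        max_offset = max(len(self.lines) - self.viewport_lines, 0)
        self.offset = min(self.offset + self.viewport_lines, max_offset)
        return self.viewport()

    def find_in_page(self, needle: str) -> str:
        """Jump to the first line containing the text (case-insensitive)."""
        for index, line in enumerate(self.lines):
            if needle.lower() in line.lower():
                self.offset = index
                return self.viewport()
        return f"'{needle}' not found on this page."


browser = TextBrowser(viewport_lines=3)
page = "\n".join(f"line {i}" for i in range(7))
print(browser.load(page))
print(browser.page_down())
```

Because each tool returns only the current viewport, the agent sees a bounded slice of the page per step instead of the whole document.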

b. File inspector

Many GAIA questions involve attached files of various types, including .xls, .mp3, and .pdf. These files require proper parsing. Autogen’s tool was utilized for this purpose due to its effectiveness.

The Autogen team’s open-source contributions significantly accelerated the development process by providing these tools.

c. Code interpreter

A separate code interpreter tool was not needed, as the agent natively generates and executes Python code, as detailed below.

Code Agent 🧑‍💻

Why a Code Agent?

As demonstrated by Wang et al. (2024), allowing an agent to express its actions in code offers several benefits over dictionary-like outputs such as JSON. A primary advantage is that code provides a highly optimized method for articulating complex action sequences. If a more rigorous way to express detailed actions existed beyond current programming languages, it would likely have emerged as a new language!

This highlights several advantages of using code:

- Code actions are much more concise than JSON.

- Need to run 4 parallel streams of 5 consecutive actions? In JSON, you would need to generate 20 JSON blobs, each in its own step; in code, it’s only 1 step.

- On average, the paper shows that Code actions require 30% fewer steps than JSON, which amounts to an equivalent reduction in the tokens generated. Since LLM calls are often the dominant cost of agent systems, agent runs become roughly 30% cheaper.

- Code makes it possible to reuse tools from common libraries.

- Using code gets better performance in benchmarks, for two reasons:

- It’s a more intuitive way to express actions

- LLMs have lots of code in their training data, which possibly makes them more fluent in code-writing than in JSON writing.

These points were confirmed during experiments on agent_reasoning_benchmark.
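The "4 streams of 5 actions" case can be made concrete with a small sketch. The `fetch` tool and the JSON action schema below are hypothetical, and plain `exec` is used only to keep the example short; it offers none of the protections of the interpreter described later in this post.

```python
import json


def fetch(stream: int, step: int, previous: str) -> str:
    """Hypothetical tool: each call builds on the previous result."""
    return f"{previous}>s{stream}a{step}"


# JSON-style actions: one tool call per blob, one blob per agent step.
json_actions = [
    json.dumps({"tool": "fetch", "arguments": {"stream": s, "step": a}})
    for s in range(4)
    for a in range(5)
]

# Code-style action: the same 20 calls written as a single agent step.
code_action = """
results = {}
for stream in range(4):
    value = "start"
    for step in range(5):
        value = fetch(stream, step, value)
    results[stream] = value
"""

namespace = {"fetch": fetch}
exec(code_action, namespace)  # illustration only, NOT a safe way to run LLM code
results = namespace["results"]

print(f"{len(json_actions)} JSON steps vs 1 code step")
```

Each JSON blob would cost one LLM call to generate, while the loop above is produced in a single generation.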

Additional advantages were observed from recent experiments in building Transformers agents:

- It is much easier to store an element as a named variable in code. For example, need to store this rock image generated by a tool for later use?

- In code, this is straightforward: `rock_image = image_generation_tool("A picture of a rock")` stores the image under the key `rock_image` in the dictionary of variables. The LLM can then reference its value in any later code block by using `rock_image`.

- With JSON, complex workarounds would be necessary to name and store the image for later access. For example, one might save the output of an image generation tool under “image_{i}.png” and rely on the LLM to infer that image_4.png corresponds to a previous tool call. Alternatively, the LLM could output an “output_name” key to specify the variable’s storage name, which would complicate the JSON action structure.

- Agent logs are considerably more readable.
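The named-variable point can be illustrated with a dictionary of variables shared across code blocks. This is a sketch under assumptions: the stub `image_generation_tool` returns a placeholder record rather than an image, and plain `exec` stands in for the agent's interpreter.

```python
def image_generation_tool(prompt: str) -> dict:
    """Hypothetical stand-in for an image tool; returns a fake record."""
    return {"prompt": prompt, "pixels": "<binary data>"}


# Dictionary of variables that persists between the agent's code blocks.
state = {"image_generation_tool": image_generation_tool}

# Two separate actions, as an LLM might emit them in two different steps.
first_action = 'rock_image = image_generation_tool("A picture of a rock")'
second_action = 'caption = "Generated from: " + rock_image["prompt"]'

for action in (first_action, second_action):
    exec(action, state)  # illustration only, not a sandboxed execution

print(state["caption"])
```

The second block refers to `rock_image` by name, with no extra naming scheme in the action format.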

Implementation of Transformers Agents’ CodeAgent

LLM-generated code can be unsafe to execute directly. Without proper guardrails, an LLM might generate code that attempts to delete personal files or share private audio, for example!

To ensure secure code execution for agents, a bottom-up approach was preferred over the typical top-down method of using a full interpreter with forbidden actions.

Concretely, an LLM-safe Python interpreter was built from the ground up. Given a Python code block from the LLM, the interpreter processes the Abstract Syntax Tree representation of the code, provided by the ast Python module. It executes tree nodes sequentially, halting at any unauthorized operation.

For instance, when the interpreter encounters an import statement, it first checks whether the module is explicitly listed in the user-defined `authorized_imports`; if it is not, the import is not executed. A default list of built-in standard Python functions, including `print` and `range`, is provided. Any function outside this list will not execute unless explicitly authorized by the user. For example, `open` (as in `with open("path.txt", "w") as file:`) is not authorized.

Upon encountering a function call (ast.Call), if the function name matches a user-defined tool, the tool is invoked with its arguments. If it’s another previously defined and allowed function, it executes normally.
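The allow-list approach can be sketched with the standard `ast` module. This is a deliberately tiny illustration, not the library's interpreter: it supports only a handful of node types, and `evaluate`, `run`, `InterpreterError`, and `DEFAULT_FUNCTIONS` are names assumed for this example.

```python
import ast
import importlib


class InterpreterError(Exception):
    """Raised when the code attempts something that is not authorized."""


# Small default allow-list of built-ins (an assumption for this sketch).
DEFAULT_FUNCTIONS = {"print": print, "range": range, "len": len}


def evaluate(node, state, tools, authorized_imports):
    """Execute one AST node; anything not explicitly handled is refused."""
    def recurse(child):
        return evaluate(child, state, tools, authorized_imports)

    if isinstance(node, ast.Module):
        for statement in node.body:  # run statements in order
            recurse(statement)
        return None
    if isinstance(node, ast.Import):
        # Sketch only handles plain `import module` statements.
        for alias in node.names:
            if alias.name not in authorized_imports:
                raise InterpreterError(f"Import of '{alias.name}' is not authorized.")
            state[alias.asname or alias.name] = importlib.import_module(alias.name)
        return None
    if isinstance(node, ast.Assign):
        value = recurse(node.value)
        for target in node.targets:
            if not isinstance(target, ast.Name):
                raise InterpreterError("Only simple name assignment is supported.")
            state[target.id] = value
        return None
    if isinstance(node, ast.Expr):
        return recurse(node.value)
    if isinstance(node, ast.Constant):
        return node.value
    if isinstance(node, ast.Name):
        if node.id not in state:
            raise InterpreterError(f"Variable '{node.id}' is not defined.")
        return state[node.id]
    if isinstance(node, ast.Call):
        if not isinstance(node.func, ast.Name):
            raise InterpreterError("Only calls to plain names are supported.")
        name = node.func.id
        if name in tools:  # user-defined tools take priority
            func = tools[name]
        elif name in DEFAULT_FUNCTIONS:
            func = DEFAULT_FUNCTIONS[name]
        else:
            raise InterpreterError(f"Function '{name}' is not authorized.")
        args = [recurse(arg) for arg in node.args]
        kwargs = {kw.arg: recurse(kw.value) for kw in node.keywords}
        return func(*args, **kwargs)
    # Bottom-up: every node type not listed above is rejected.
    raise InterpreterError(f"{type(node).__name__} is not supported.")


def run(code, tools, authorized_imports=()):
    """Parse the code, execute it node by node, and return the variables."""
    state = {}
    evaluate(ast.parse(code), state, tools, authorized_imports)
    return state


print(run("x = double(21)", tools={"double": lambda n: n * 2})["x"])
```

The final `raise` is what makes the design bottom-up: a construct is refused unless a branch explicitly supports it, so `open` or an unlisted import fails before anything is executed.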

Several adjustments were made to assist LLM usage of the interpreter:

- The number of operations during execution is capped to prevent infinite loops from LLM-generated code. A counter increments with each operation, and execution is interrupted if a threshold is reached.

- The number of lines in print outputs is capped to prevent excessive context length for the LLM. For example, if an LLM reads a 1M-line text file and attempts to print every line, the output will be truncated to prevent agent memory overload.
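Both caps can be sketched independently of the interpreter. The thresholds, class, and function names below are assumptions chosen for illustration, not the library's actual values or API.

```python
MAX_OPERATIONS = 1000  # hypothetical threshold
MAX_PRINT_LINES = 5    # hypothetical threshold


class OperationLimitExceeded(Exception):
    """Raised when generated code runs for too many operations."""


class OperationCounter:
    """Counts operations and interrupts execution past a limit."""

    def __init__(self, limit: int = MAX_OPERATIONS):
        self.limit = limit
        self.count = 0

    def tick(self) -> None:
        # Called once per executed operation by the interpreter loop.
        self.count += 1
        if self.count > self.limit:
            raise OperationLimitExceeded(
                f"Stopped after {self.limit} operations (possible infinite loop)."
            )


def truncate_output(text: str, max_lines: int = MAX_PRINT_LINES) -> str:
    """Keep the first max_lines lines and note how many were dropped."""
    lines = text.splitlines()
    if len(lines) <= max_lines:
        return text
    omitted = len(lines) - max_lines
    return "\n".join(lines[:max_lines] + [f"... ({omitted} more lines truncated)"])


# Simulate an infinite loop: the counter interrupts it.
counter = OperationCounter(limit=100)
try:
    while True:
        counter.tick()
except OperationLimitExceeded as error:
    print(error)

print(truncate_output("\n".join(str(i) for i in range(10))))
```

Appending a truncation notice, rather than cutting silently, lets the LLM know that the output it sees is incomplete.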

Basic multi-agent orchestration

Web browsing generates a significant amount of context, much of which is often irrelevant. For instance, in the GAIA question mentioned above, the crucial piece of information is the image of the painting “Embroidery from Uzbekistan”; surrounding content, such as the blog post it was found on, is generally unhelpful for solving the overall task.

To address this, a multi-agent approach is beneficial. For example, a manager agent can handle the higher-level task and delegate specific web search tasks to a web search agent. The web search agent then returns only the pertinent search results, preventing the manager from being overwhelmed with irrelevant data.

This multi-agent orchestration was implemented in the workflow:

- The top level agent is a ReactCodeAgent. It natively handles code since its actions are formulated and executed in Python. It has access to the following tools:

- file_inspector to read text files, with an optional question argument: when a question is provided, the tool returns only its answer to that question based on the file content, rather than the whole content

- visualizer to specifically answer questions about images.

- search_agent to browse the web. More specifically, this Tool is just a wrapper around a Web Search agent, which is a JSON agent (JSON still works well for strictly sequential tasks, like web browsing where you scroll down, then navigate to a new page, and so on). This agent in turn has access to the web browsing tools:

- informational_web_search

- page_down

- find_in_page

- … (full list at this line)

Embedding an agent as a tool represents a straightforward approach to multi-agent orchestration. This method proved surprisingly effective!
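Wrapping an agent as a tool can be sketched as below. The `Tool` and `WebSearchAgent` classes are simplified, hypothetical stand-ins (the real web search agent loops over browsing tools with an LLM), and the page text is placeholder data.

```python
class Tool:
    """Hypothetical minimal tool: a named, described callable."""

    def __init__(self, name: str, description: str, func):
        self.name = name
        self.description = description
        self.func = func

    def __call__(self, *args, **kwargs):
        return self.func(*args, **kwargs)


class WebSearchAgent:
    """Stub sub-agent: scans stored pages and returns one short result."""

    def __init__(self, pages: dict):
        self.pages = pages  # url -> page text (placeholder data)

    def run(self, keyword: str) -> str:
        # A real agent would browse step by step; this stub returns the
        # first sentence mentioning the keyword and drops everything else.
        for text in self.pages.values():
            for sentence in text.split(". "):
                if keyword.lower() in sentence.lower():
                    return sentence.strip()
        return "No relevant result found."


pages = {
    "https://example.com/blog": (
        "Long introduction about the blog. "
        "The painting shows fruit A and fruit B. "
        "Comments, navigation links and other filler"
    )
}

# From the manager's point of view, the whole agent is just one more tool.
search_agent = Tool(
    name="search_agent",
    description="Browses the web and returns a short answer.",
    func=WebSearchAgent(pages).run,
)

print(search_agent("painting"))
```

The manager only receives the sub-agent's return value, so the browsing context never enters the manager's own prompt.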

Planning component 🗺️

With numerous planning strategies available, a relatively simple plan-ahead workflow was chosen. Every N steps, two elements are generated:

- a summary of facts known or derivable from context, and facts needing discovery

- a step-by-step plan to solve the task, given fresh observations and the factual summary above

The parameter N can be adjusted for optimal performance on the target use case; N=2 was selected for the manager agent and N=5 for the web search agent.

An interesting finding was that omitting the previous plan as input improved the score. This suggests that LLMs can be strongly biased by contextual information. If an old plan is present in the prompt, an LLM might heavily reuse it rather than re-evaluating and generating a new plan when necessary.

Both the factual summary and the plan serve as additional context for generating the next action. Planning helps an LLM select a more effective trajectory by presenting all necessary steps and the current state of the task.
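The periodic planning loop can be sketched as follows. This is an assumption-laden illustration rather than the submitted code: the prompts, the function names, and the choice to plan at step 0 are all hypothetical. The previous plan is deliberately left out of the planning prompt, in line with the finding above.

```python
from typing import Callable, List, Tuple


def run_with_planning(task: str,
                      llm: Callable[[str], str],
                      act: Callable[[str, str, str, List[str]], Tuple[str, bool]],
                      planning_interval: int,
                      max_steps: int) -> List[str]:
    """Run an agent loop that refreshes facts and plan every N steps."""
    memory: List[str] = []  # observations gathered so far
    facts, plan = "", ""
    for step in range(max_steps):
        if step % planning_interval == 0:
            facts = llm(
                f"Task: {task}\nObservations: {memory}\n"
                "List the known facts and the facts still to discover."
            )
            # The old plan is NOT passed in, so the model replans from scratch.
            plan = llm(
                f"Task: {task}\nFacts: {facts}\nObservations: {memory}\n"
                "Write a step-by-step plan."
            )
        # Facts and plan are extra context for choosing the next action.
        observation, done = act(task, facts, plan, memory)
        memory.append(observation)
        if done:
            break
    return memory


# Stubs standing in for the LLM and the action step (demo assumptions).
def demo_llm(prompt: str) -> str:
    return "stub output"


def demo_act(task: str, facts: str, plan: str, memory: List[str]) -> Tuple[str, bool]:
    return f"observation {len(memory)}", len(memory) >= 3


print(run_with_planning("demo task", demo_llm, demo_act,
                        planning_interval=2, max_steps=10))
```

With `planning_interval=2`, this loop replans before steps 0, 2, 4, and so on, which corresponds to the N=2 setting mentioned for the manager agent.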

Results 🏅

The final code used for the submission is available here.

A score of 44.2% was achieved on the validation set, positioning Transformers Agents’ ReactCodeAgent as #1 overall, 4 points ahead of the second-place entry. On the test set, a score of 33.3% was obtained, ranking it #2, ahead of Microsoft Autogen’s submission, and achieving the best average score on the challenging Level 3 questions.

This data supports the effectiveness of Code actions. Given their efficiency, Code actions are expected to become the standard for agents defining their actions, potentially replacing JSON/OAI formats.

As far as is known, LangChain and LlamaIndex do not natively support Code actions. Microsoft’s Autogen offers some support for Code actions (executing code in docker containers), but it appears to be supplementary to JSON actions. Transformers Agents stands out as the only library to make this format central.

Next steps

This article outlines ongoing efforts to improve Transformers Agents across several areas:

- LLM engine: The submission utilized GPT-4o (alas), without any fine-tuning. It is hypothesized that a fine-tuned open-source model could eliminate parsing errors and achieve a higher score!

- Multi-agent orchestration: The current approach is straightforward; more seamless orchestration could lead to significant advancements!

- Web browser tool: Using the selenium package, a web browser could be developed that handles cookie banners and loads JavaScript, thereby accessing many currently inaccessible pages.

- Improve planning further: Ablation tests are being conducted with other options from the literature to determine the most effective method. Alternative implementations of existing components and new components are planned for exploration. Updates will be published as more insights become available!