Since October 2010, Stack Exchange sites ran on physical hardware in a New York City-area datacenter (actually located in New Jersey). The original server was reportedly displayed on a wall with a plaque, the way one might display a cherished item. Photos and updates about the server racks have been shared over the years.

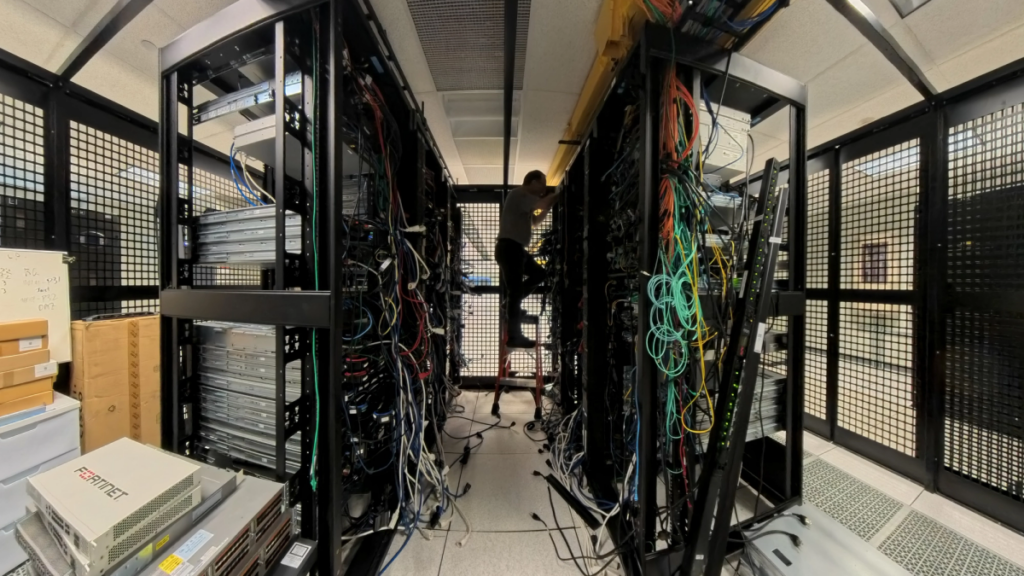

For nearly 16 years, the Site Reliability Engineering (SRE) team managed all datacenter operations: maintaining physical servers, cabling, racking, and replacing failed disks. This work required being on-site at the datacenter for hardware maintenance.

All sites have since moved to the cloud. Servers are now treated as scalable resources rather than unique machines. Now that the New Jersey datacenter has been decommissioned, nobody needs to visit it to replace or reboot hardware.

On July 2nd, in preparation for the datacenter’s closure, all servers were unracked and all cables unplugged, marking the end of these machines’ operational life. A strategic plan to adopt cloud infrastructure had been in place for several years. Stack Overflow for Teams migrated to Azure in 2023, and the public sites (Stack Overflow and the Stack Exchange network) subsequently moved to Google Cloud. The transition was accelerated when the New Jersey datacenter vendor announced it was shutting down, requiring the premises to be vacated by July 2025.

Another datacenter, located in Colorado, was decommissioned in June. It served primarily as a disaster recovery site, which was no longer needed. Stack Overflow now has no physical datacenters or offices: it runs entirely in the cloud and works fully remotely.

The SRE team and other contributors were instrumental in achieving this migration. Future blog posts will detail the process of moving Stack Exchange sites to the cloud.

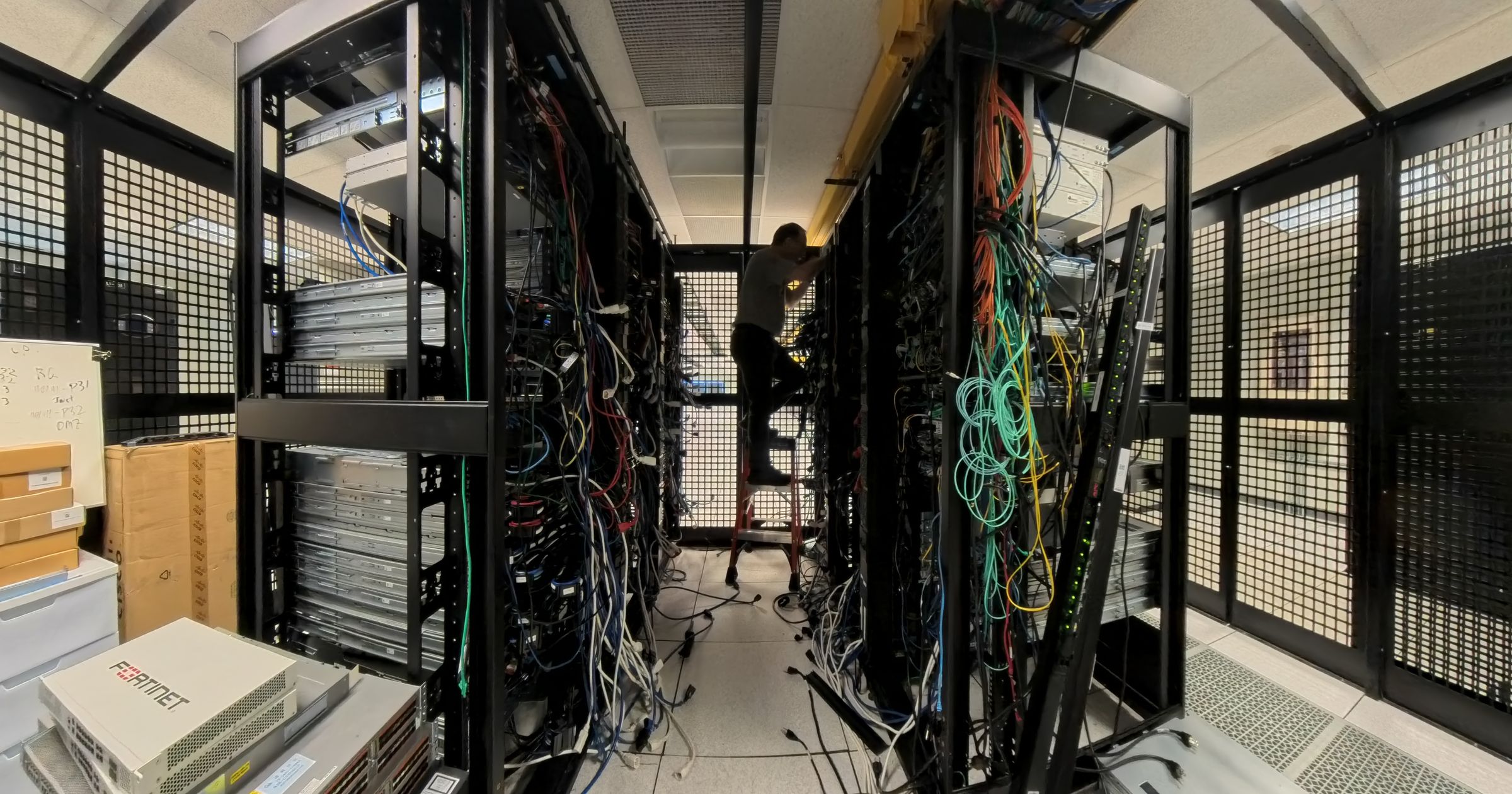

Approximately 50 servers were located at this facility. The photos show how the servers looked at the start of decommissioning day.

With eight or more cables per machine and over 50 machines, the cable count ran into the hundreds. Even though the cables were neatly organized in individual ‘arms’ for each server, de-cabling this many hosts was labor-intensive.

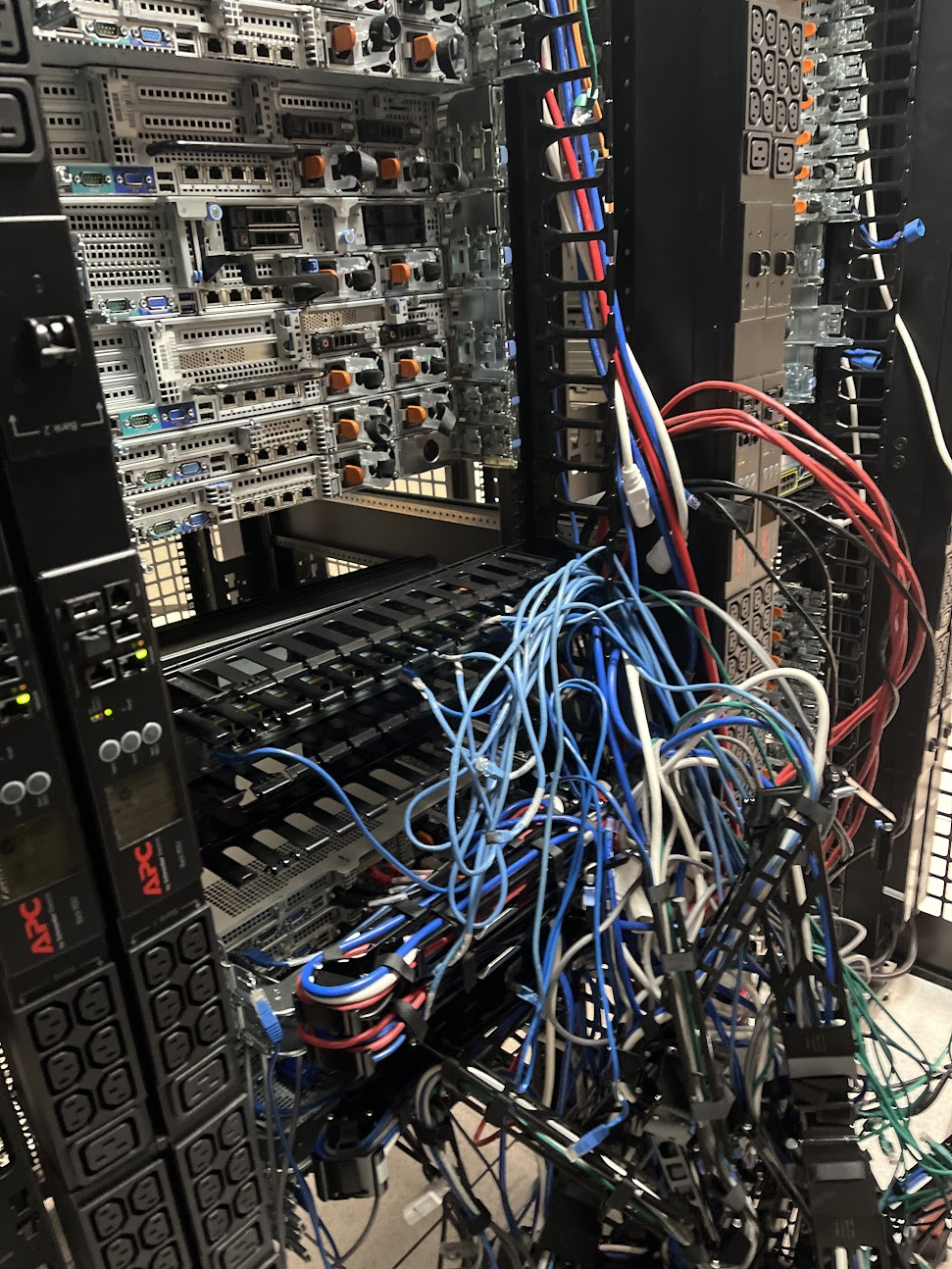

Why so many cables per machine? A staged photograph labels each connection:

- Blue: A single 1G ethernet cable for the management network (remote access).

- Black: One cable transmitting VGA video and USB (keyboard and mouse) signals to a “KVM switch.” This switch allowed connection to the keyboard, video, and mouse of any machine in the datacenter, providing remote access in emergencies.

- Red: Two 10G ethernet cables for the primary network.

- Black: Two additional 10G ethernet cables for the primary network, used only on machines requiring extra bandwidth, such as SQL servers.

- White+blue: Two power cables, each connected to a different circuit for redundancy.
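As a rough illustration of how these connections add up, the following Python sketch tallies the cables in the list above. It counts only the cable types named there, so it yields six or eight per machine, and real machines may have carried more. The names `Cable`, `cables_per_server`, and `total_cables` are hypothetical, and the number of high-bandwidth machines is an input because the article does not state it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cable:
    color: str
    purpose: str
    count: int
    optional: bool = False  # True if fitted only on high-bandwidth machines


# Cable types taken from the list above; nothing else is assumed.
CABLES = [
    Cable("blue", "1G management network", 1),
    Cable("black", "KVM (VGA video + USB)", 1),
    Cable("red", "10G primary network", 2),
    Cable("black", "10G primary network, extra bandwidth", 2, optional=True),
    Cable("white+blue", "power, one per circuit", 2),
]


def cables_per_server(high_bandwidth: bool) -> int:
    """Sum the listed cables for one machine."""
    return sum(c.count for c in CABLES if high_bandwidth or not c.optional)


def total_cables(servers: int, high_bandwidth_servers: int) -> int:
    """Total listed cables for a fleet; the split is a caller-supplied guess."""
    if not 0 <= high_bandwidth_servers <= servers:
        raise ValueError("high_bandwidth_servers must be between 0 and servers")
    standard = servers - high_bandwidth_servers
    return (standard * cables_per_server(False)
            + high_bandwidth_servers * cables_per_server(True))


if __name__ == "__main__":
    # Bounds for a 50-machine fleet: no high-bandwidth hosts vs. all of them.
    print(total_cables(50, 0), total_cables(50, 50))
```

For a fleet of 50, this brackets the listed cables between the all-standard and all-high-bandwidth cases, which is consistent with a cable count in the hundreds.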

Hardware enthusiasts may find these details interesting. Then came the disassembly. Josh Zhang, a Staff Site Reliability Engineer, expressed some nostalgia: “The new web tier servers were installed a few years ago as part of planned upgrades. It’s bittersweet to be the one deracking them as well.” The sentiment was likened to the story of Old Yeller.

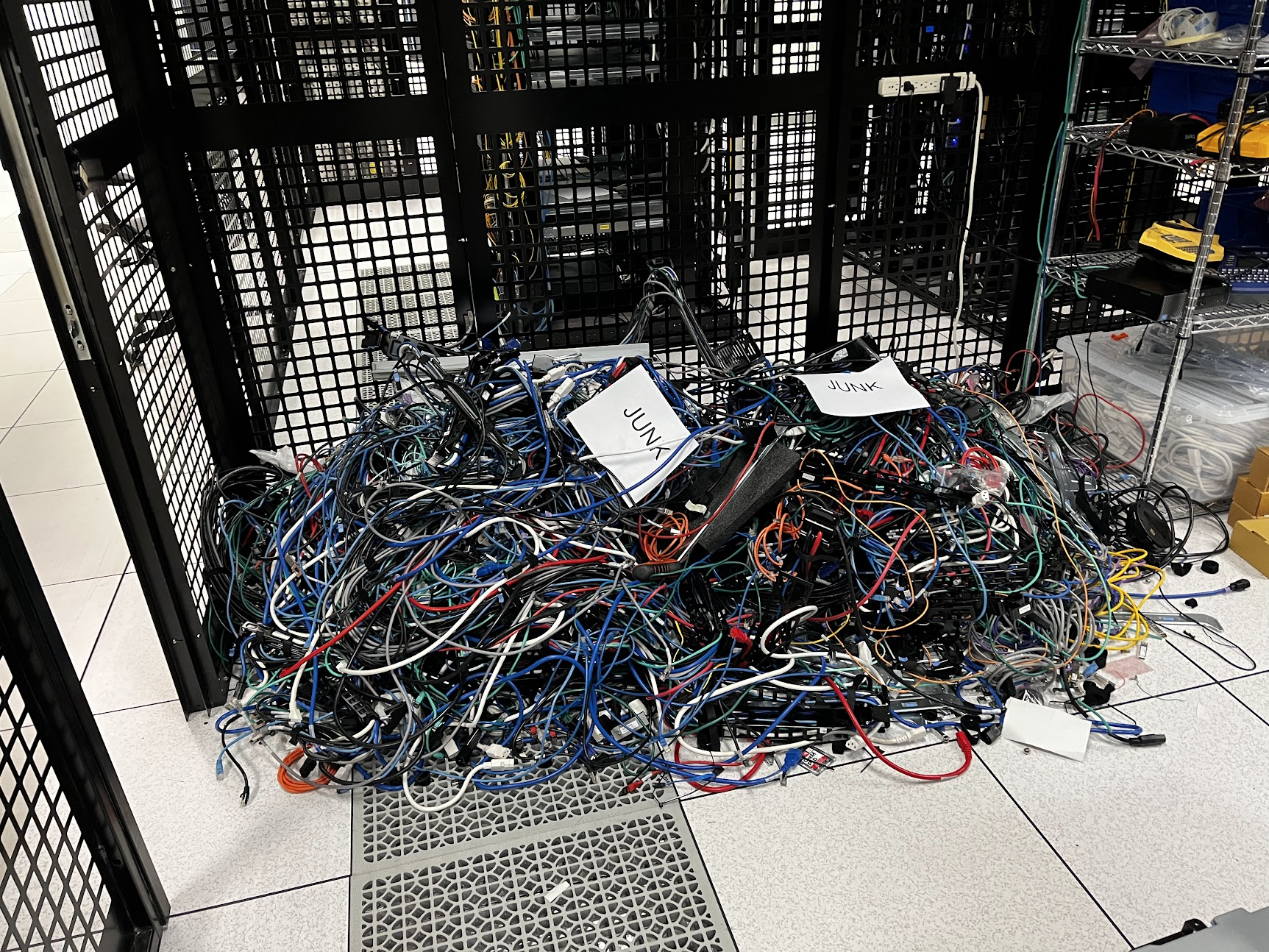

Typically, a datacenter decommissioning involves preserving some machines for relocation. In this instance, however, every machine was slated for disposal, which allowed for fast and less cautious handling. Any equipment within the designated area was sent to a disposal company. For security, and to protect the Personally Identifiable Information (PII) of users and customers, everything was shredded or destroyed and nothing was retained. Ellora Praharaj, Director of Reliability Engineering, commented, “No need to be gentle anymore.”

Clearing a rack involved two steps: de-cabling all machines, then un-racking them. The photos show racks in various stages of de-cabling. Anything salvageable had already been removed, so there was no need to be meticulous or careful. Once removed, the bulk of the cables went onto a large pile.

For those who have experienced difficulty disconnecting an RJ45 cable, this process offered the chance to simply cut the cables rather than struggling with stubborn release tabs.

A pile of discarded items accumulated. At first, everything was tossed into a corner of the room, until it became apparent that the pile might block the exit. After that, items were stacked vertically rather than spread horizontally.

All servers and network devices were arranged into seven distinct piles on the floor.

A question arises whether this image represents the ‘before’ state from around 2015 during initial setup or the ‘after’ state following decommissioning.