Llamafile 0.8.14, the newest version of the widely used open-source AI tool, has been released. As a Mozilla Builders project, Llamafile turns model weights into fast, self-contained executables that run on most computers, so users can get the most out of open LLMs on the hardware they already have.

New Chat Interface Introduced

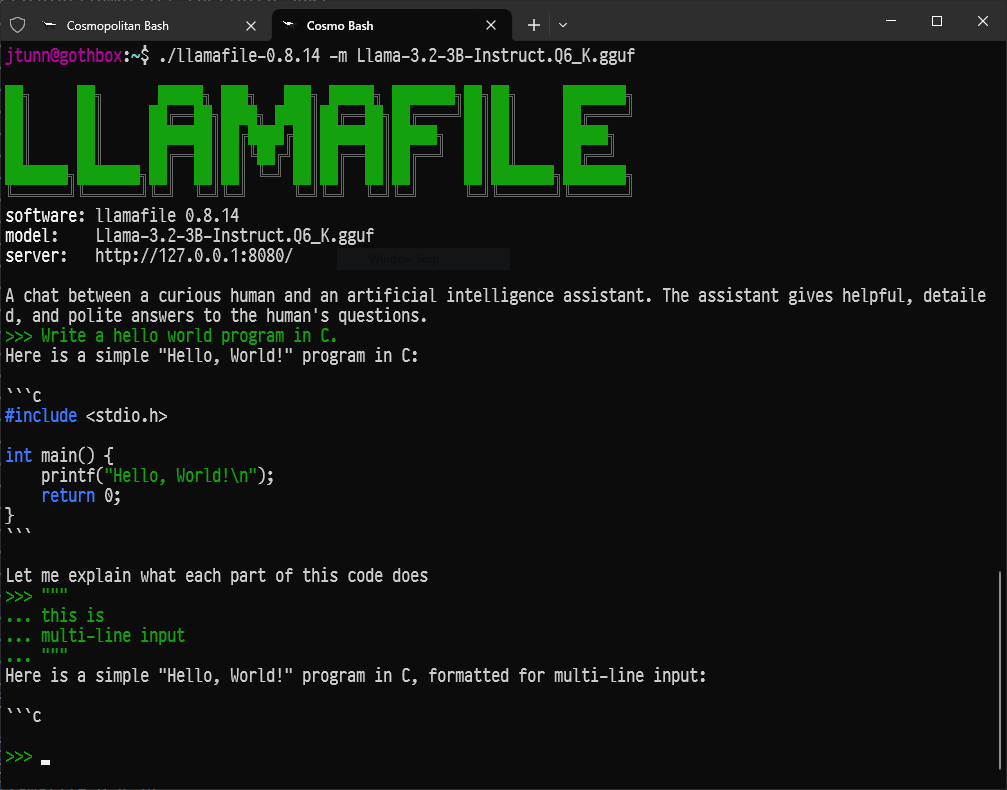

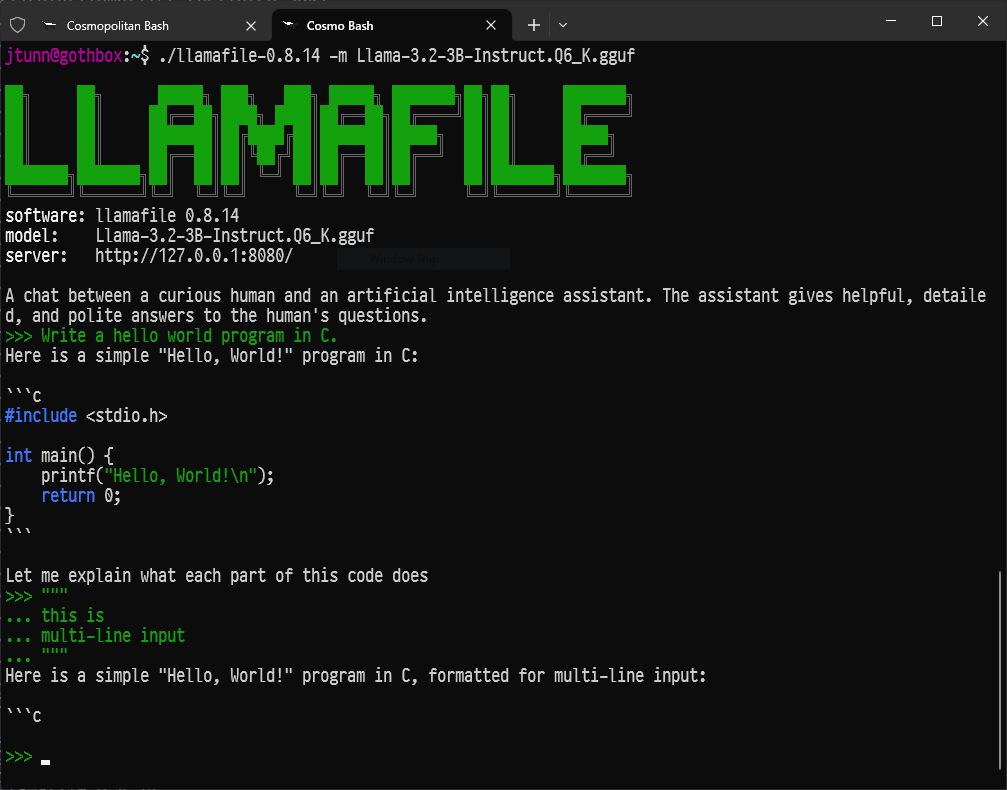

A significant addition in this release is a vibrant new command-line chat interface. When a Llamafile is launched, the new chat UI appears automatically in the terminal. It is faster, easier to use, and generally simpler than the web-based interface that was previously the default. That original web interface, inherited from the upstream llama.cpp project, is still available and supports a range of features, including image uploads. To use it, point a browser at port 8080 on localhost (http://localhost:8080).
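
Before opening the browser, it can be handy to check that something is actually listening on that port. The following is a minimal sketch using only the Python standard library. The function name `web_ui_reachable` is hypothetical, and the check only confirms that a TCP connection succeeds, not that the listener is a Llamafile.

```python
import socket


def web_ui_reachable(host: str = "localhost", port: int = 8080,
                     timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds.

    This only shows that *something* accepts connections there; it does
    not verify that the listener is a Llamafile web interface.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name resolution errors.
        return False


if __name__ == "__main__":
    if web_ui_reachable():
        print("Something is listening; try http://localhost:8080 in a browser.")
    else:
        print("Nothing is accepting connections on port 8080 yet.")
```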

Additional Recent Enhancements

Beyond the new chat UI, the project has seen many other improvements. Since the last update, lead developer Justine Tunney has shipped several new releases, each of which moved the project forward significantly. Key highlights include:

Llamafiler: A new, from-scratch OpenAI-compatible API server, Llamafiler, is under development. This server aims to be more reliable, stable, and considerably faster than its predecessor. The embeddings endpoint has already been released, demonstrating a threefold speed increase compared to the one in llama.cpp. Work is ongoing on the completions endpoint, after which Llamafiler is expected to become the default API server for Llamafile.
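
To make "OpenAI-compatible" concrete, here is a rough sketch of what a client for an embeddings endpoint might look like. Several things here are assumptions rather than details from the release: the base URL, the `/v1/embeddings` path, the model name `local-model`, and the request and response shapes, which follow the OpenAI embeddings API convention. Check the Llamafiler documentation for the actual address and path.

```python
import json
import urllib.request

# Assumption: the server listens here. Adjust to match your setup.
BASE_URL = "http://localhost:8080"


def build_embeddings_request(texts, model="local-model", base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style embeddings request.

    The "/v1/embeddings" path and the "model"/"input" fields follow the
    OpenAI API convention; they are assumed, not confirmed for Llamafiler.
    """
    payload = json.dumps({"model": model, "input": texts}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/v1/embeddings",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_embeddings_response(body):
    """Extract embedding vectors from an OpenAI-style response body.

    Items are sorted by their "index" field so the output order matches
    the order of the input texts.
    """
    doc = json.loads(body)
    items = sorted(doc["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]


def embed(texts, **kwargs):
    """Send the request and return the vectors. Needs a running server."""
    request = build_embeddings_request(texts, **kwargs)
    with urllib.request.urlopen(request) as response:
        return parse_embeddings_response(response.read())
```

Building the request and parsing the response are kept separate from the network call, so both can be exercised without a server running.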

Performance Improvements: Llamafile has seen substantial speed enhancements in recent months, thanks in part to open-source contributors such as k-quant inventor @Kawrakow. Notably, pre-fill (prompt evaluation) speed has significantly improved across various architectures:

- Intel Core i9 improved from 100 tokens/second to 400 (a 4x increase).

- AMD Threadripper saw an increase from 300 tokens/second to 2,400 (an 8x improvement).

- The Raspberry Pi 5 also experienced a significant leap, from 8 tokens/second to 80 (a 10x boost).
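
To see what these figures mean in practice, the short sketch below recomputes the speedup ratios from the numbers above and estimates how long prompt evaluation would take. The 2,000-token prompt length is a hypothetical value chosen for illustration, and the estimate assumes throughput stays constant over the whole prompt.

```python
# (before, after) prompt evaluation speeds in tokens/second, from the list above.
benchmarks = {
    "Intel Core i9": (100, 400),
    "AMD Threadripper": (300, 2400),
    "Raspberry Pi 5": (8, 80),
}


def speedup(before, after):
    """Ratio of new throughput to old throughput."""
    return after / before


def prefill_seconds(prompt_tokens, tokens_per_second):
    """Time to evaluate a prompt, assuming constant throughput."""
    return prompt_tokens / tokens_per_second


PROMPT_TOKENS = 2000  # hypothetical prompt length, not from the release notes

for name, (before, after) in benchmarks.items():
    old = prefill_seconds(PROMPT_TOKENS, before)
    new = prefill_seconds(PROMPT_TOKENS, after)
    print(f"{name}: {speedup(before, after):.0f}x faster, "
          f"{old:.1f}s -> {new:.1f}s for {PROMPT_TOKENS} tokens")
```

Under these assumptions, the Raspberry Pi 5 would go from 250 seconds to 25 seconds for the same prompt.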

These enhancements, coupled with the new high-speed embedding server, position Llamafile as a leading solution for executing complex local AI applications that utilize techniques such as retrieval augmented generation (RAG).
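
For readers new to RAG, the retrieval step usually amounts to ranking stored document embeddings by their similarity to a query embedding. The sketch below shows that step with cosine similarity. The three-dimensional vectors and document names are made up for illustration; real vectors would come from an embedding server and have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, doc_vecs, k=2):
    """Return the ids of the k documents most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id)
              for doc_id, vec in doc_vecs.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Toy embeddings (hypothetical values, for illustration only).
docs = {
    "release-notes": [0.9, 0.1, 0.0],
    "recipe": [0.0, 0.2, 0.9],
    "benchmarks": [0.7, 0.6, 0.1],
}
query = [1.0, 0.2, 0.0]

print(top_k(query, docs))  # ['release-notes', 'benchmarks']
```

The retrieved documents would then be added to the prompt sent to the LLM, which is where faster prompt evaluation pays off.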

Support for Powerful New Models: Llamafile consistently integrates advancements in open LLMs, now supporting dozens of new models and architectures. These range in size from 405 billion parameters down to 1 billion. Some of the new Llamafiles available for download on Hugging Face include:

- Llama 3.2 1B and 3B: These models deliver impressive performance and quality despite their compact size.

- Llama 3.1 405B: This “frontier model” can be run locally with adequate system RAM.

- OLMo 7B: Developed by the Allen Institute, OLMo stands out as one of the first truly open and transparent models available.

- TriLM: A novel “1.58 bit” tiny model optimized for CPU inference, suggesting a future where traditional matrix multiplication may become less dominant.
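
How much RAM is "adequate" depends mostly on parameter count and bits per weight. The back-of-the-envelope sketch below estimates the size of the weights alone. It is an assumption-laden approximation: it ignores the KV cache, activations, and the extra metadata that real quantization formats store, so actual requirements will be higher. It also shows where a figure like "1.58 bit" can come from, assuming weights restricted to three values, since log2(3) ≈ 1.585.

```python
import math


def weight_memory_gb(num_params, bits_per_weight):
    """Approximate size of the weights alone, in gigabytes (10^9 bytes).

    Ignores KV cache, activations, and quantization metadata.
    """
    return num_params * bits_per_weight / 8 / 1e9


# Bits needed per weight if each weight takes one of three values.
TERNARY_BITS = math.log2(3)  # about 1.585

models = [("405B", 405e9), ("3B", 3e9), ("1B", 1e9)]
for label, params in models:
    for bits in (16, 8, 4):
        size = weight_memory_gb(params, bits)
        print(f"{label} at {bits} bits/weight: about {size:.1f} GB")

print(f"Ternary weights need about {TERNARY_BITS:.3f} bits each")
```

By this estimate, the weights of a 405-billion-parameter model take about 810 GB at 16 bits per weight and about 202.5 GB at 4 bits per weight.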

Whisperfile: Speech-to-Text in a Single File: Thanks to contributions from community member @cjpais, the project now includes Whisperfile. Just as Llamafile did for llama.cpp, Whisperfile turns whisper.cpp into a single multi-platform executable. This makes it straightforward to use OpenAI’s Whisper technology for efficient speech-to-text conversion, regardless of the user’s hardware.

Community Contributions

Llamafile aims to establish a robust foundation for developing advanced local AI applications. Justine Tunney’s efforts on the new Llamafiler server are crucial to this objective, as is the continuous work to support new models and enhance inference performance for a broad user base. Many significant advancements in these and other areas have originated from the community, with contributors such as @Kawrakow, @cjpais, @mofosyne, and @Djip007 consistently making valuable contributions.