- Ax 1.0, an open-source platform that uses machine learning to automatically guide complex, resource-intensive experimentation, has been released.

- The platform is widely used to improve AI models, tune production infrastructure, and accelerate advances in ML and even hardware design.

- An accompanying paper, “Ax: A Platform for Adaptive Experimentation,” explains Ax’s architecture and methodology and compares it with other state-of-the-art black-box optimization libraries.

Researchers often struggle to understand and optimize AI models or systems that have many possible configurations. The problem is common in complex systems with interacting components, such as those found in modern AI development. Efficient experimentation is crucial when evaluating even a single configuration requires significant time or resources.

Adaptive experimentation provides a solution by actively suggesting new configurations for sequential evaluation, building on insights from prior assessments.

Version 1.0 of Ax, an open-source adaptive experimentation platform, was released this year. It uses machine learning to guide and automate the experimentation process. Ax employs Bayesian optimization, allowing researchers and developers to run experiments efficiently and identify optimal configurations for their systems.

Alongside this release, a paper titled “Ax: A Platform for Adaptive Experimentation” was published. It details Ax’s architecture, explains its optimization methodology, and compares its performance with other black-box optimization libraries.

Ax has been successfully applied across various disciplines, including:

- Traditional machine learning tasks, such as hyperparameter optimization and architecture search.

- Addressing key challenges in GenAI, including discovering optimal data mixtures for training AI models.

- Tuning infrastructure or compiler flags in production settings.

- Optimizing design parameters in physical engineering tasks, such as designing AR/VR devices.

By using Ax, developers can run complex experiments with state-of-the-art methods, both optimizing their systems and gaining a deeper understanding of them.

How to Get Started With Ax

To begin using Ax for efficient parameter tuning in complex systems, install the latest version of the library using `pip install ax-platform`. Further resources, including a quickstart guide, tutorials, and detailed explanations of Ax’s underlying methods, are available on the Ax website.

Ax Is for Real World Experimentation

Adaptive experiments, while highly beneficial, can be difficult to implement. They necessitate advanced machine learning techniques for optimization and specialized infrastructure for managing experiment states, automating orchestration, and providing analysis. Furthermore, experimental goals often extend beyond improving a single metric, typically involving a careful balance of multiple objectives, constraints, and guardrails.

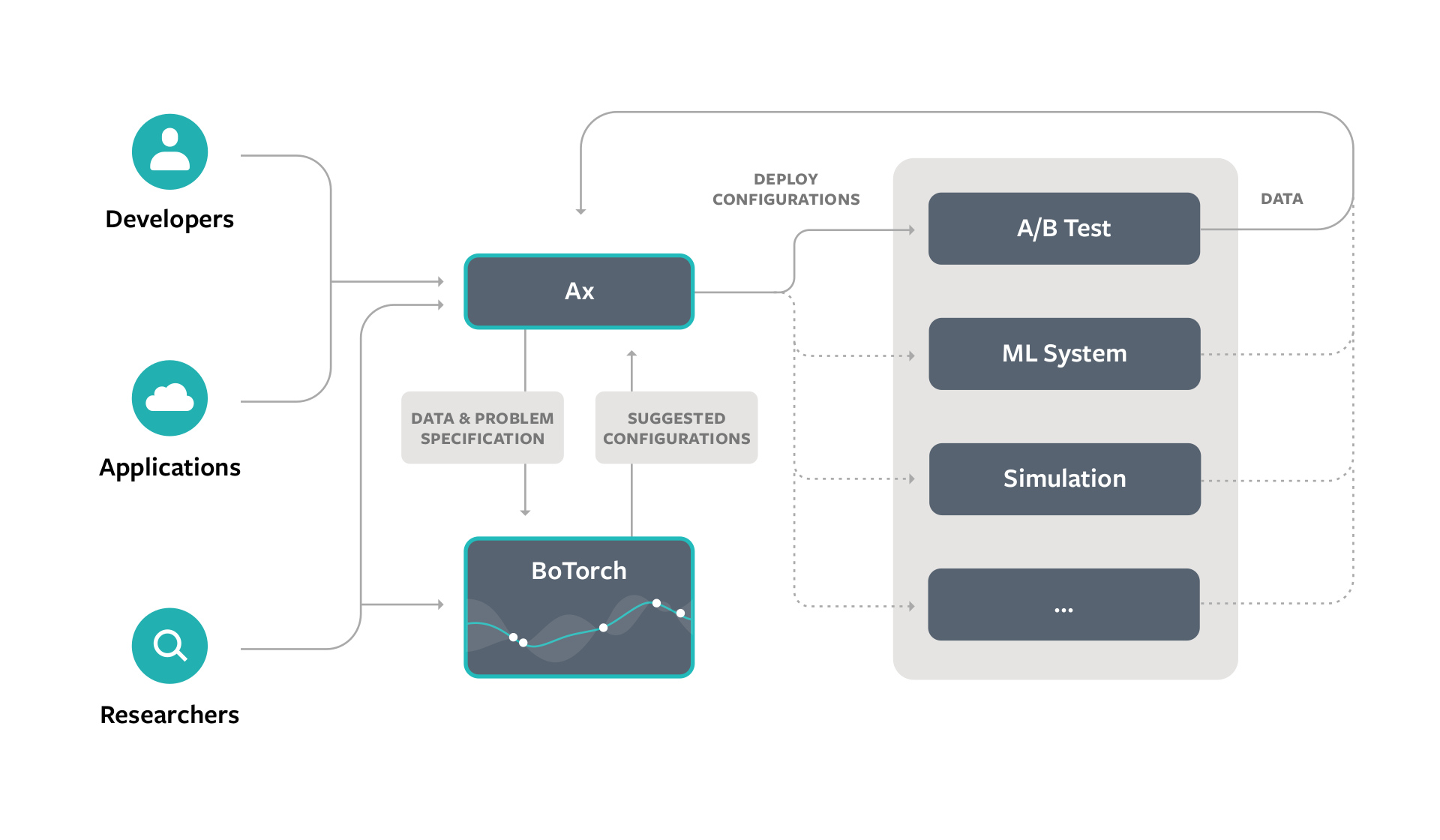

Ax was developed to enable users to easily configure and execute these dynamic experiments with state-of-the-art techniques. It also provides a robust platform for researchers to integrate advanced methods into production systems.

Ax for Understanding

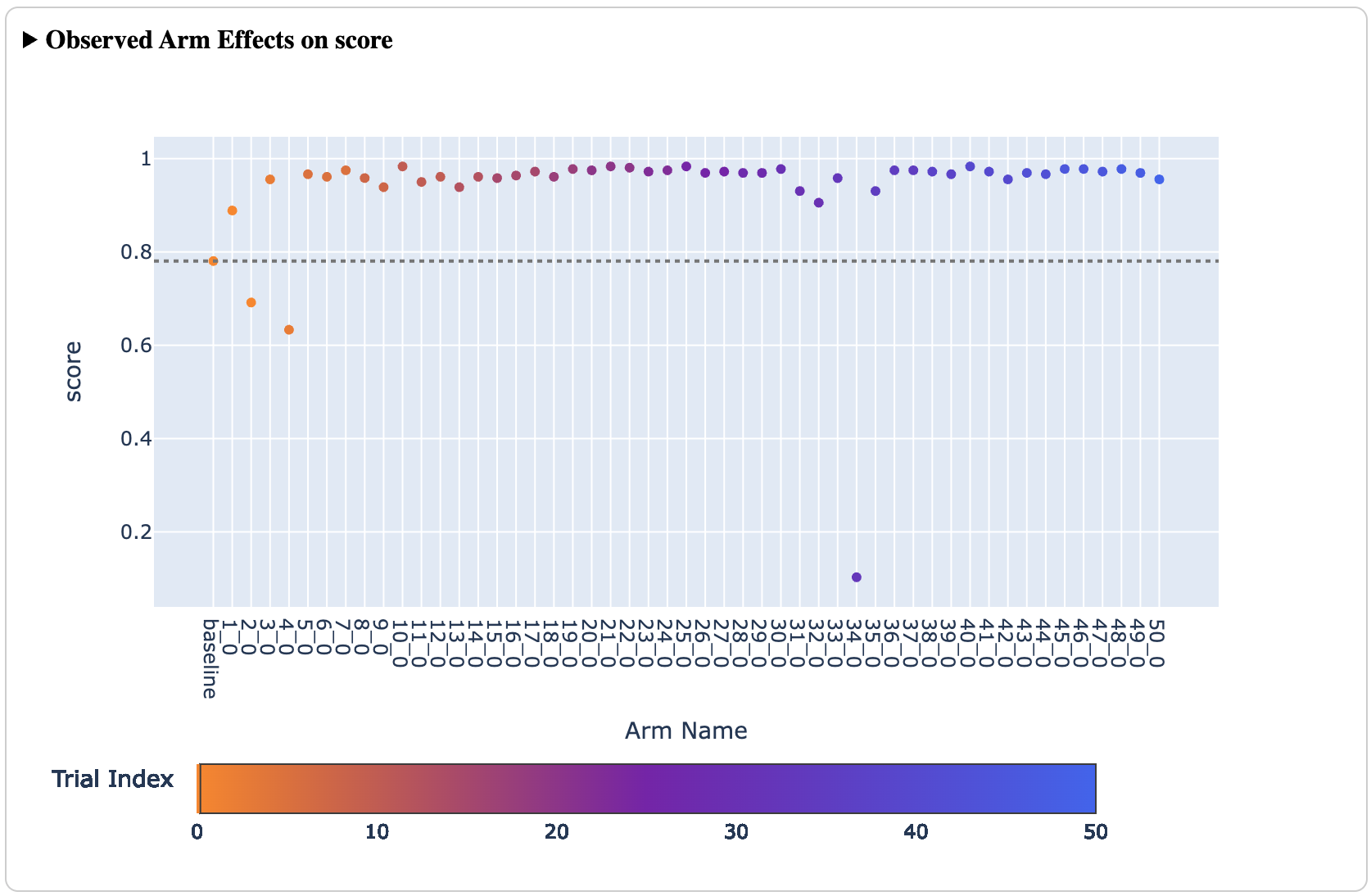

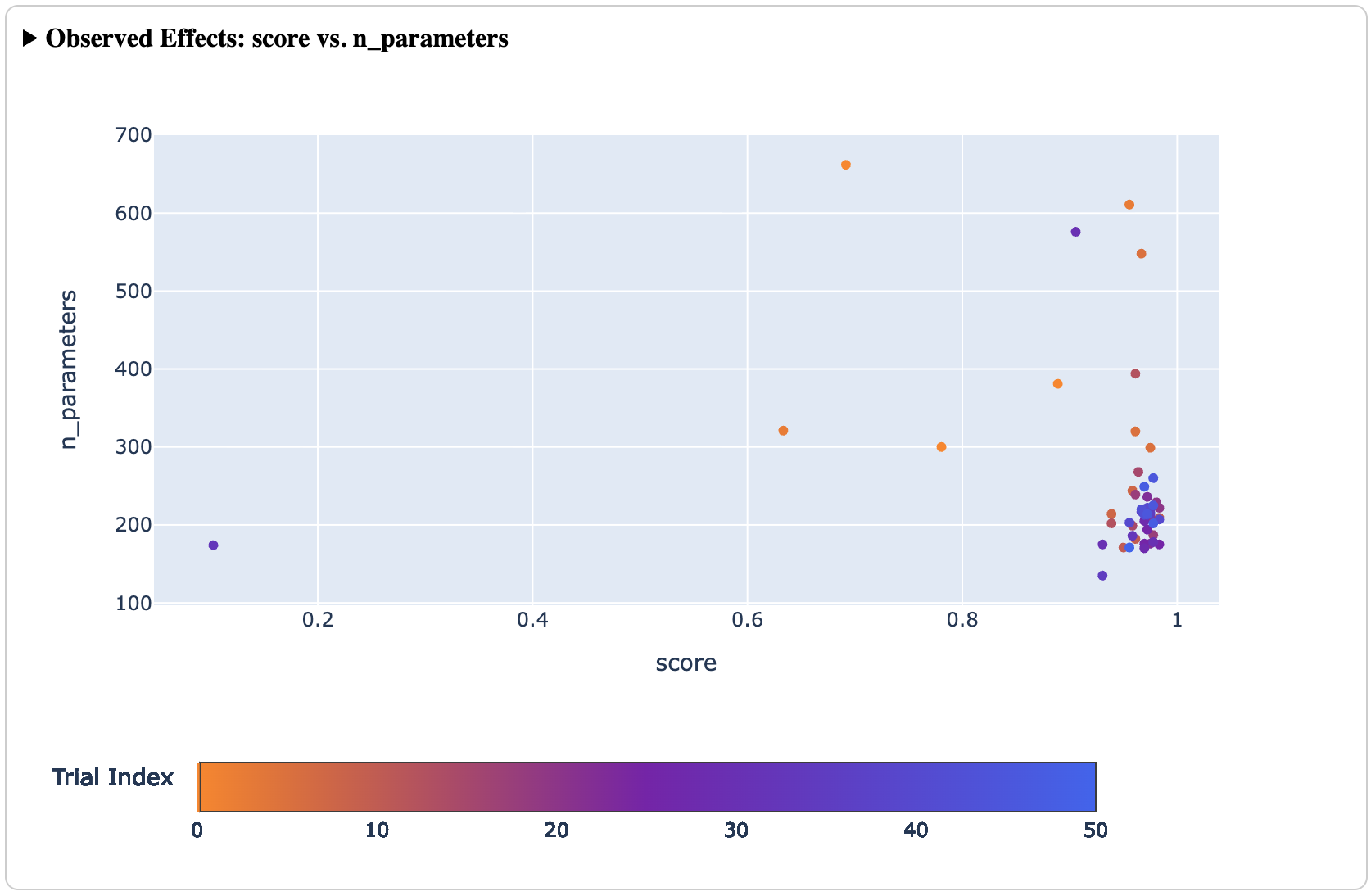

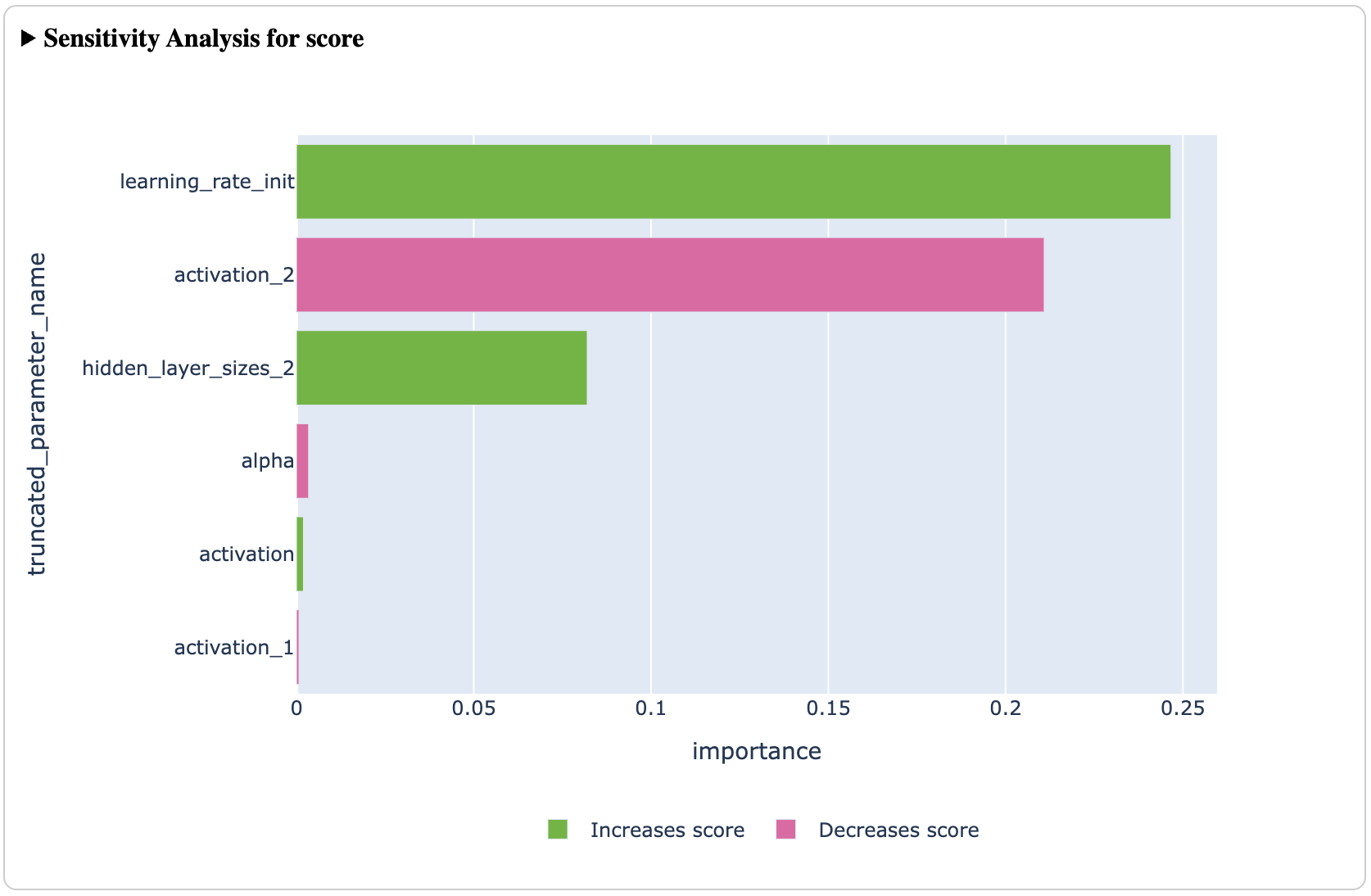

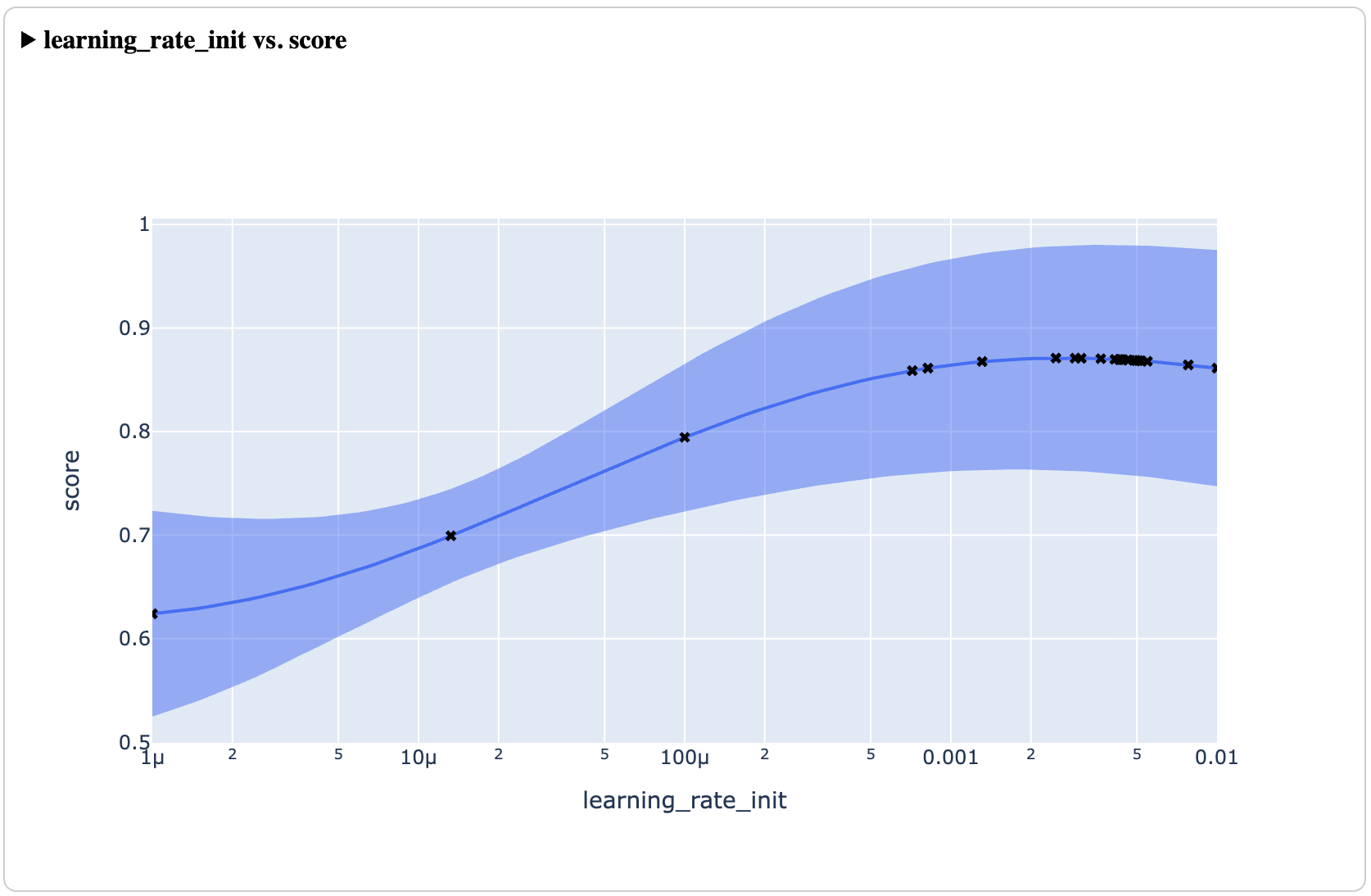

Beyond efficiently identifying optimal configurations, Ax serves as a valuable tool for understanding the system under optimization. It offers a suite of analyses, including plots and tables, to help users track optimization progress, visualize tradeoffs between metrics using a Pareto frontier, observe the impact of parameters across the input space, and determine each parameter’s contribution to the results through sensitivity analysis.
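To make the Pareto frontier concrete: a configuration lies on the frontier when no other configuration is at least as good on every metric and strictly better on at least one. The minimal Python sketch below computes that set from already-observed results. It is not Ax's implementation; it assumes every metric is to be maximized (negate any metric you want to minimize), and the function names, trial names, and values are hypothetical.

```python
from typing import Dict, List, Sequence


def dominates(q: Sequence[float], p: Sequence[float]) -> bool:
    """True if q is at least as good as p on every metric and strictly better on one.

    All metrics are assumed to be maximized.
    """
    return all(qi >= pi for qi, pi in zip(q, p)) and any(qi > pi for qi, pi in zip(q, p))


def pareto_frontier(trials: Dict[str, Sequence[float]]) -> List[str]:
    """Return the names of non-dominated trials, sorted alphabetically."""
    return sorted(
        name
        for name, p in trials.items()
        if not any(dominates(q, p) for other, q in trials.items() if other != name)
    )


# Hypothetical results: (accuracy, -model_size_mb); size is negated so both are maximized.
trials = {
    "a": (0.90, -120.0),
    "b": (0.88, -60.0),
    "c": (0.85, -70.0),  # worse than "b" on both metrics, so it is dominated
    "d": (0.92, -200.0),
}
print(pareto_frontier(trials))  # ['a', 'b', 'd']
```

Each frontier point represents a different tradeoff (here, "d" is most accurate, "b" is smallest), which is exactly what a Pareto frontier plot lets an experimenter compare before choosing one to deploy.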

These tools give experimenters both an optimal configuration to deploy and a deeper understanding of their system that can guide future decisions.

How Ax Works

Ax primarily uses Bayesian optimization, an adaptive experimentation method that is effective at balancing exploration (learning how new configurations perform) and exploitation (refining configurations that previously performed well). Ax’s Bayesian optimization components are implemented using BoTorch.

Bayesian optimization is an iterative method for solving the global optimization problem $\arg\max_{x \in \mathcal{X}} f(x)$ without making assumptions about the form of the function $f$. In practice, this means optimizing a system by evaluating candidate configurations $x$ (trying them out and measuring $f(x)$), building a surrogate model from this data, using the model to identify the next most promising configuration, and repeating until an optimal solution is found or the experimental budget is depleted.
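Written as a recurrence, one common way to formalize this loop is shown below. The notation is chosen here for illustration: the goal is to maximize $f$ over a search space $\mathcal{X}$, $\mathcal{D}_n$ is the data after $n$ evaluations, $y_i$ is the possibly noisy measurement of $f(x_i)$, and $\alpha$ is the acquisition function computed from the surrogate model fit to $\mathcal{D}_n$.

```latex
\begin{aligned}
\mathcal{D}_n &= \{(x_i, y_i)\}_{i=1}^{n}, \qquad y_i = f(x_i) + \varepsilon_i \\
x_{n+1} &= \arg\max_{x \in \mathcal{X}} \alpha(x \mid \mathcal{D}_n) \\
\mathcal{D}_{n+1} &= \mathcal{D}_n \cup \{(x_{n+1}, y_{n+1})\}
\end{aligned}
```

The surrogate is re-fit to $\mathcal{D}_{n+1}$ before the next step, and the loop stops once the evaluation budget is spent.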

Typically, Ax uses a Gaussian process (GP) as the surrogate model within the Bayesian optimization loop. A GP is a flexible model that makes predictions and quantifies their uncertainty, which makes it particularly effective when data is limited. Ax then uses an acquisition function from the expected improvement (EI) family to propose the next candidate configurations. EI scores a candidate by how much it is expected to improve on the best configuration evaluated so far, taking into account both the predicted value and its uncertainty.
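For a single candidate whose GP posterior is Gaussian with mean $\mu$ and standard deviation $\sigma$, classic EI for maximization has the closed form $(\mu - f^*)\Phi(z) + \sigma\varphi(z)$ with $z = (\mu - f^*)/\sigma$, where $f^*$ is the best value observed so far. The Python sketch below implements that textbook formula. It is illustrative only: Ax's actual acquisition functions come from BoTorch and may be batch, noise-aware, or log-transformed variants, and the posterior values in the example are hypothetical.

```python
import math


def normal_pdf(z: float) -> float:
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu: float, sigma: float, best_f: float) -> float:
    """Analytic EI for maximization, given a Gaussian posterior N(mu, sigma^2) at a point."""
    if sigma <= 0.0:
        # No uncertainty: the improvement is deterministic.
        return max(mu - best_f, 0.0)
    z = (mu - best_f) / sigma
    return (mu - best_f) * normal_cdf(z) + sigma * normal_pdf(z)


best_so_far = 0.95
# Hypothetical posterior values at two candidate points.
exploit = expected_improvement(mu=1.00, sigma=0.05, best_f=best_so_far)  # confident, slightly better
explore = expected_improvement(mu=0.80, sigma=0.50, best_f=best_so_far)  # worse on average, very uncertain
print(round(exploit, 4), round(explore, 4))  # 0.0542 0.1334
```

The uncertain candidate wins even though its predicted mean is below the current best, which is how EI trades off exploration against exploitation.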

The animation below illustrates this loop, with a GP modeling the goal metric (blue) and EI (black). The point with the highest EI value is the next ‘x’ to evaluate. After the new ‘x’ is evaluated, the GP is re-fit with the new data point and EI is recomputed.
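The same one-dimensional loop can be reproduced end to end in a few dozen lines. The following is a minimal, dependency-free Python sketch, not Ax or BoTorch code. It assumes a zero-mean GP with a squared-exponential kernel and a fixed lengthscale (real libraries fit these hyperparameters to the data), a grid of candidate points on [0, 1], plain analytic EI, and a hypothetical objective $-(x - 0.7)^2$. All function names are illustrative.

```python
import math
from typing import Callable, List, Sequence, Tuple

LENGTHSCALE = 0.2  # assumed fixed; real GP libraries fit kernel hyperparameters to data
JITTER = 1e-6      # small diagonal term for numerical stability


def rbf(a: float, b: float) -> float:
    """Squared-exponential kernel with unit signal variance."""
    return math.exp(-0.5 * ((a - b) / LENGTHSCALE) ** 2)


def cholesky(K: List[List[float]]) -> List[List[float]]:
    """Lower-triangular L with K = L L^T."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = K[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(max(s, 1e-12)) if i == j else s / L[j][j]
    return L


def forward(L: List[List[float]], b: Sequence[float]) -> List[float]:
    """Solve L y = b for lower-triangular L."""
    y: List[float] = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y


def backward(L: List[List[float]], y: Sequence[float]) -> List[float]:
    """Solve L^T x = y for lower-triangular L."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_fit(X: List[float], Y: List[float]) -> Tuple[List[List[float]], List[float]]:
    """Zero-mean GP: return the Cholesky factor of K and alpha = K^{-1} Y."""
    K = [[rbf(a, b) + (JITTER if i == j else 0.0) for j, b in enumerate(X)] for i, a in enumerate(X)]
    L = cholesky(K)
    return L, backward(L, forward(L, Y))


def gp_predict(X: List[float], L: List[List[float]], alpha: List[float], x: float) -> Tuple[float, float]:
    """Posterior mean and standard deviation at x."""
    k_star = [rbf(x, xi) for xi in X]
    mu = sum(k * a for k, a in zip(k_star, alpha))
    v = forward(L, k_star)
    var = rbf(x, x) - sum(vi * vi for vi in v)
    return mu, math.sqrt(max(var, 0.0))


def expected_improvement(mu: float, sigma: float, best_f: float) -> float:
    if sigma <= 0.0:
        return max(mu - best_f, 0.0)
    z = (mu - best_f) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (mu - best_f) * cdf + sigma * pdf


def bayes_opt(f: Callable[[float], float], n_iter: int = 8) -> Tuple[float, float, List[float]]:
    grid = [i / 100 for i in range(101)]  # candidate x values in [0, 1]
    X = [grid[10], grid[50], grid[90]]    # fixed initial design
    Y = [f(x) for x in X]
    for _ in range(n_iter):
        L, alpha = gp_fit(X, Y)           # 1. re-fit the surrogate to all data so far
        best = max(Y)
        candidates = [x for x in grid if x not in X]
        scores = [expected_improvement(*gp_predict(X, L, alpha, x), best) for x in candidates]
        x_next = candidates[scores.index(max(scores))]  # 2. pick the candidate with highest EI
        X.append(x_next)                  # 3. evaluate it and record the result
        Y.append(f(x_next))
    i_best = Y.index(max(Y))
    return X[i_best], Y[i_best], X


# Hypothetical objective with its maximum at x = 0.7.
x_best, y_best, evaluated = bayes_opt(lambda x: -(x - 0.7) ** 2)
print(x_best, y_best, len(evaluated))
```

Searching a finite grid keeps the sketch short; libraries such as BoTorch instead optimize the acquisition function over a continuous space.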

This one-dimensional example extends to multiple input and output dimensions, enabling Ax to optimize problems with many (potentially hundreds of) tunable parameters and outcomes. The surrogate-based approach is particularly valuable in higher-dimensional settings, where covering the search space becomes exponentially more expensive for alternatives such as grid search.
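A back-of-the-envelope calculation shows why exhaustive coverage does not scale. Taking a full grid as the point of comparison, with $k$ values per parameter over $d$ parameters, the number of evaluations $N$ is:

```latex
\begin{aligned}
N &= k^{d} \\
k = 10,\; d = 6 \;&\Rightarrow\; N = 10^{6} \\
k = 2,\; d = 100 \;&\Rightarrow\; N = 2^{100} \approx 1.27 \times 10^{30}
\end{aligned}
```

Even the coarsest possible grid is out of reach at that scale when each evaluation is a training run or a production experiment, which is where a surrogate model that generalizes from a limited number of evaluations pays off.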

Further details on Bayesian optimization are available on the Introduction to Bayesian Optimization page of the Ax website.

How Ax Is Used

Ax has been widely deployed to address challenging optimization problems. It is used by numerous developers for tasks such as hyperparameter optimization and architecture search for AI models, tuning parameters for online recommender and ranking models, infrastructure optimizations, and simulation optimization for AR and VR hardware design.

These experiments optimize complex goals using sophisticated algorithms. For example, multi-objective optimization has been employed to simultaneously enhance a machine learning model’s accuracy while reducing its resource consumption. When researchers needed to reduce the size of natural language models for the first generation of Ray-Ban Stories, Ax was utilized to find models that optimally balanced size and performance. Constrained optimization techniques are also applied for tuning recommender systems, optimizing key metrics while preventing regressions.
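Constrained optimization can be illustrated with one textbook formulation: weight EI on the objective by the posterior probability that a guardrail metric stays within its bound. This is a minimal sketch under stated assumptions, not Ax's or BoTorch's implementation. It assumes each metric has an independent Gaussian posterior at a candidate, writes the guardrail as "regression must be at most 0", and uses hypothetical numbers and function names throughout.

```python
import math


def normal_pdf(z: float) -> float:
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu: float, sigma: float, best_f: float) -> float:
    """Analytic EI for maximizing the objective."""
    if sigma <= 0.0:
        return max(mu - best_f, 0.0)
    z = (mu - best_f) / sigma
    return (mu - best_f) * normal_cdf(z) + sigma * normal_pdf(z)


def prob_feasible(mu_c: float, sigma_c: float, bound: float) -> float:
    """P(c <= bound) when the guardrail metric c ~ N(mu_c, sigma_c^2)."""
    if sigma_c <= 0.0:
        return 1.0 if mu_c <= bound else 0.0
    return normal_cdf((bound - mu_c) / sigma_c)


def constrained_ei(mu_f: float, sigma_f: float, best_feasible_f: float,
                   mu_c: float, sigma_c: float, bound: float = 0.0) -> float:
    """EI on the objective, down-weighted by the chance of violating the guardrail."""
    return expected_improvement(mu_f, sigma_f, best_feasible_f) * prob_feasible(mu_c, sigma_c, bound)


# Hypothetical posteriors. Objective: a metric to maximize.
# Guardrail c: regression in a second metric, which must stay <= 0.
best_feasible = 1.0  # best objective value among observations that met the guardrail
score_a = constrained_ei(mu_f=1.20, sigma_f=0.1, best_feasible_f=best_feasible, mu_c=0.5, sigma_c=0.2)
score_b = constrained_ei(mu_f=1.05, sigma_f=0.1, best_feasible_f=best_feasible, mu_c=-0.3, sigma_c=0.2)
print(score_a < score_b)  # True: A has larger raw EI but likely regresses the guardrail
```

Multiplying by the probability of feasibility keeps the search focused on promising configurations that are also unlikely to cause regressions.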

Recently, Ax contributed to the design of new faster-curing, low-carbon concrete mixes, which have been deployed at data center construction sites. These mixes represent significant progress toward a goal of net-zero emissions by 2030.

Similar challenges arise in many domains where system quality depends on complex parameter interactions that are difficult to understand without experimentation, and where experimentation itself is costly. Ax addresses them with a data-driven approach that adapts each experiment to the results gathered so far, making efficient use of every costly evaluation.

The Future of Ax

Work continues on enhancing Ax with new features for innovative experiment designs, optimization methods, and integrations with external platforms. Ax is an open-source project (MIT license), and both practitioner and research communities are encouraged to contribute. Contributions can include improved surrogate models or acquisition functions, extensions for specific research applications that benefit the broader community, or bug fixes and enhancements to core capabilities. The team can be contacted via GitHub Issues.