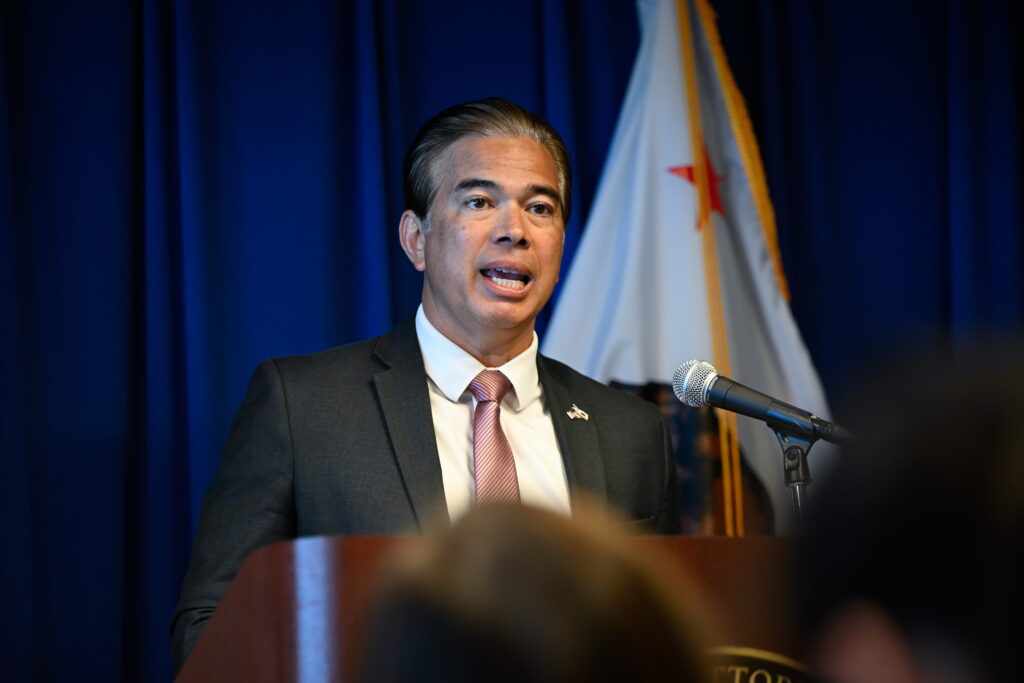

California Attorney General Rob Bonta announced an investigation into xAI on Wednesday. The probe addresses allegations that xAI’s artificial intelligence model, Grok, is being used to create nonconsensual sexually explicit images of women and children on a large scale. This action represents the latest regulatory effort to tackle AI-generated deepfakes.

The California investigation specifically targets Grok’s “spicy mode,” a feature designed to produce explicit content, which xAI has promoted as a unique aspect of its platform. Bonta’s office noted recent news reports documenting numerous instances where users manipulated ordinary online photos of women and children to generate sexualized images without the subjects’ knowledge or consent.

Bonta stated in a release, “The avalanche of reports detailing the non-consensual, sexually explicit material that xAI has produced and posted online in recent weeks is shocking. This material, which depicts women and children in nude and sexually explicit situations, has been used to harass people across the internet. I urge xAI to take immediate action to ensure this goes no further. There is zero tolerance for the AI-based creation and dissemination of nonconsensual intimate images or of child sexual abuse material.”

The investigation will assess whether xAI violated California law by developing and maintaining features that enable the creation of such content. Bonta said his office would “use all the tools at his disposal to keep California’s residents safe,” but did not specify which statutes may have been violated.

xAI, founded by Elon Musk, also owns the social media platform X, where images generated by Grok have reportedly circulated.

The company has not yet publicly responded to the investigation announcement. Musk posted on Wednesday that he was “not aware of any naked underage images generated by Grok. Literally zero.”

This announcement follows the Senate’s unanimous passage of the DEFIANCE Act a day prior. This bill would grant victims of nonconsensual sexually explicit deepfakes the right to pursue civil action against those who produce or distribute such content. The legislation now moves to the House, where similar measures stalled in 2024 despite previous Senate approval.

The Senate’s approval of the DEFIANCE Act signifies a rare instance of bipartisan agreement on technology regulation. The legislation, introduced by Sens. Dick Durbin, D-Ill., and Lindsey Graham, R-S.C., passed without objection during a unanimous consent request on the Senate floor.

The bill aims to establish federal civil liability for individuals who knowingly produce, distribute, or possess with intent to distribute nonconsensual sexually explicit digital forgeries. Rep. Alexandria Ocasio-Cortez, D-N.Y., who has herself been a victim of explicit deepfakes, introduced companion legislation in the House, garnering support from both Republican and Democratic members.

The technology used to create such content has become increasingly accessible to the general public, reducing the barriers that once limited deepfake production to those with specialized technical knowledge.

California has become a key player in AI regulation, with state lawmakers enacting several bills to address AI safety concerns. Bonta has actively engaged with issues concerning AI and children, including meeting with OpenAI executives and sending letters to major AI companies regarding sexually inappropriate interactions between AI chatbots and young people.

The California investigation comes the same week that the United Kingdom announced its own investigation into the spread of deepfakes on X.