Developing effective agentic AI requires a new research playbook. When systems plan, decide, and act on a user’s behalf, UX moves beyond usability testing into the realm of trust, consent, and accountability. This article outlines the research methods needed to design agentic AI systems responsibly.

Agentic AI stands ready to transform customer experience and operational efficiency, necessitating a new strategic approach from leadership. This evolution in artificial intelligence empowers systems to plan, execute, and persist in tasks, moving beyond simple recommendations to proactive action. For UX teams, product managers, and executives, understanding this shift is crucial for unlocking opportunities in innovation, streamlining workflows, and redefining how technology serves people.

It’s easy to confuse Agentic AI with Robotic Process Automation (RPA), a technology focused on rules-based tasks performed on computers. The distinction lies in rigidity versus reasoning. RPA is excellent at following a strict script: if X happens, do Y. It mimics human hands. Agentic AI mimics human reasoning. It does not follow a linear script; it creates one.

Consider a recruiting workflow. An RPA bot can scan a resume and upload it to a database. It performs a repetitive task perfectly. An Agentic system looks at the resume, notices the candidate lists a specific certification, cross-references that with a new client requirement, and decides to draft a personalized outreach email highlighting that match. RPA executes a predefined plan; Agentic AI formulates the plan based on a goal. This autonomy separates agents from the predictive tools that have been used for the last decade.

Another example is managing meeting conflicts. A predictive model integrated into a user’s calendar might analyze their meeting schedule and the schedules of colleagues. It could then suggest potential conflicts, such as two important meetings scheduled at the same time, or a meeting scheduled when a key participant is on vacation. It provides information and flags potential issues, but the user is responsible for taking action.

An agentic AI, in the same scenario, would go beyond just suggesting conflicts to avoid. Upon identifying a conflict with a key participant, the agent could act by:

- Checking the availability of all necessary participants.

- Identifying alternative time slots that work for everyone.

- Sending out proposed new meeting invitations to all attendees.

- Drafting and sending an email that explains the need to reschedule and offers alternative times, if the conflict involves an external participant.

- Updating the user’s calendar and the calendars of colleagues with the new meeting details once confirmed.

This agentic AI understands the goal (resolving the meeting conflict), plans the steps (checking availability, finding alternatives, sending invites), executes those steps, and persists until the conflict is resolved, all with minimal direct user intervention. This demonstrates the “agentic” difference: the system takes proactive steps for the user, rather than just providing information to the user.

Agentic AI systems understand a goal, plan a series of steps to achieve it, execute those steps, and even adapt if things go wrong. Think of it like a proactive digital assistant. The underlying technology often combines large language models (LLMs) for understanding and reasoning, with planning algorithms that break down complex tasks into manageable actions. These agents can interact with various tools, APIs, and even other AI models to accomplish their objectives, and critically, they can maintain a persistent state, meaning they remember previous actions and continue working towards a goal over time. This makes them fundamentally different from typical generative AI, which usually completes a single request and then resets.
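The goal, plan, execute, adapt cycle with persistent state can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not how any particular agent framework works: the planner is a placeholder where an LLM or planning algorithm would sit, the tools are plain functions standing in for calendar or email APIs, and `AgentState`, `plan`, and `run_agent` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AgentState:
    """Persistent memory: what the agent is pursuing and what it has already done."""
    goal: str
    completed: List[str] = field(default_factory=list)
    log: List[str] = field(default_factory=list)


# A tool is any callable that attempts one step and reports success (hypothetical shape).
Tool = Callable[[AgentState], bool]


def plan(state: AgentState, tools: Dict[str, Tool]) -> List[str]:
    # Placeholder planner: an LLM or planning algorithm would decompose the goal here.
    # This sketch simply returns every known step that has not succeeded yet.
    return [name for name in tools if name not in state.completed]


def run_agent(state: AgentState, tools: Dict[str, Tool], max_rounds: int = 3) -> bool:
    for round_no in range(max_rounds):
        steps = plan(state, tools)
        if not steps:
            return True  # goal reached
        for step in steps:
            ok = tools[step](state)
            state.log.append(f"round {round_no}: {step} -> {'ok' if ok else 'failed'}")
            if not ok:
                break  # adapt: stop executing and re-plan from the current state
            state.completed.append(step)
    return not plan(state, tools)


# Usage: the meeting-conflict example, with one step that fails on its first attempt.
attempts = {"find_alternative_slots": 0}


def find_alternative_slots(state: AgentState) -> bool:
    attempts["find_alternative_slots"] += 1
    return attempts["find_alternative_slots"] > 1  # simulated transient failure


tools: Dict[str, Tool] = {
    "check_availability": lambda state: True,
    "find_alternative_slots": find_alternative_slots,
    "send_invites": lambda state: True,
}
state = AgentState(goal="Resolve meeting conflict")
resolved = run_agent(state, tools)
```

Because `completed` lives in the state rather than inside the loop, re-planning after the failed step skips work that already succeeded; persisting that state between sessions is what would let a real agent keep working toward a goal over time.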

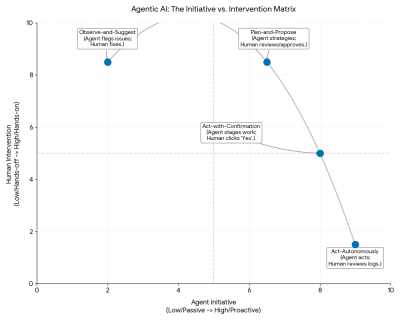

A Simple Taxonomy of Agentic Behaviors

Agent behavior can be categorized into four distinct modes of autonomy. While these often look like a progression, they function as independent operating modes. A user might trust an agent to act autonomously for scheduling, but keep it in “suggestion mode” for financial transactions.

These levels were derived by adapting industry standards for autonomous vehicles (SAE levels) to digital user experience contexts.

Observe-and-Suggest

The agent functions as a monitor. It analyzes data streams and flags anomalies or opportunities, but takes zero action.

Differentiation: Unlike the next level, the agent generates no complex plan. It points to a problem.

Example: A DevOps agent notices a server CPU spike and alerts the on-call engineer. It does not know how to fix the problem and does not try to, but it knows something is wrong.

Implications for design and oversight: At this level, design and oversight should prioritize clear, non-intrusive notifications and a well-defined process for users to act on suggestions. The focus is on empowering the user with timely and relevant information without taking control. UX practitioners should focus on making suggestions clear and easy to understand, while product managers need to ensure the system provides value without overwhelming the user.

Plan-and-Propose

The agent identifies a goal and generates a multi-step strategy to achieve it. It presents the full plan for human review.

Differentiation: The agent acts as a strategist. It does not execute; it waits for approval on the entire approach.

Example: The same DevOps agent notices the CPU spike, analyzes the logs, and proposes a remediation plan:

- Spin up two extra instances.

- Restart the load balancer.

- Archive old logs.

The human reviews the logic and clicks “Approve Plan”.

Implications for design and oversight: For agents that plan and propose, design must ensure the proposed plans are easily understandable and that users have intuitive ways to modify or reject them. Oversight is crucial in monitoring the quality of proposals and the agent’s planning logic. UX practitioners should design clear visualizations of the proposed plans, and product managers must establish clear review and approval workflows.

Act-with-Confirmation

The agent completes all preparation work and places the final action in a staged state. It effectively holds the door open, waiting for a nod.

Differentiation: This differs from “Plan-and-Propose” because the work is already done and staged. It reduces friction. The user confirms the outcome, not the strategy.

Example: A recruiting agent drafts five interview invitations, finds open times on calendars, and creates the calendar events. It presents a “Send All” button. The user provides the final authorization to trigger the external action.

Implications for design and oversight: When agents act with confirmation, the design should provide transparent and concise summaries of the intended action, clearly outlining potential consequences. Oversight needs to verify that the confirmation process is robust and that users are not being asked to blindly approve actions. UX practitioners should design confirmation prompts that are clear and provide all necessary information, and product managers should prioritize a robust audit trail for all confirmed actions.

Act-Autonomously

The agent executes tasks independently within defined boundaries.

Differentiation: The user reviews the history of actions, not the actions themselves.

Example: The recruiting agent sees a conflict, moves the interview to a backup slot, updates the candidate, and notifies the hiring manager. The human only sees a notification: “Interview rescheduled to Tuesday.”

Implications for design and oversight: For autonomous agents, the design needs to establish clear pre-approved boundaries and provide robust monitoring tools. Oversight requires continuous evaluation of the agent’s performance within these boundaries, backed by robust logging, clear override mechanisms, and user-defined kill switches that maintain user control and trust. UX practitioners should focus on designing effective dashboards for monitoring autonomous agent behavior, and product managers must ensure clear governance and ethical guidelines are in place.

Figure 1: The Agentic Autonomy Matrix. This framework maps four distinct operating modes by correlating the level of agent initiative against the required amount of human intervention.


Let’s look at a real-world application in HR technology to see these modes in action. Consider an “Interview Coordination Agent” designed to handle the logistics of hiring.

- In Suggest Mode: The agent notices an interviewer is double-booked. It highlights the conflict on the recruiter’s dashboard: “Warning: Sarah is double-booked for the 2 PM interview.”

- In Plan Mode: The agent analyzes Sarah’s calendar and the candidate’s availability. It presents a solution: “I recommend moving the interview to Thursday at 10 AM. This requires moving Sarah’s 1:1 with her manager.” The recruiter reviews this logic.

- In Confirmation Mode: The agent drafts the emails to the candidate and the manager. It populates the calendar invites. The recruiter sees a summary: “Ready to reschedule to Thursday. Send updates?” The recruiter clicks “Confirm.”

- In Autonomous Mode: The agent handles the conflict instantly. It respects a pre-set rule: “Always prioritize candidate interviews over internal 1:1s.” It moves the meeting and sends the notifications. The recruiter sees a log entry: “Resolved schedule conflict for Candidate B.”
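The four modes can be expressed as a per-domain setting that decides how far the agent goes before a human steps in. The sketch below replays the Interview Coordination Agent example under assumed names (`Mode`, `MODE_BY_DOMAIN`, `handle_conflict`) and an assumed encoding of the pre-set rule; the messages mirror the examples above, and no real scheduling API is called.

```python
from enum import Enum
from typing import Callable, Tuple


class Mode(Enum):
    OBSERVE_AND_SUGGEST = "suggest"
    PLAN_AND_PROPOSE = "plan"
    ACT_WITH_CONFIRMATION = "confirm"
    ACT_AUTONOMOUSLY = "autonomous"


# Modes are independent per domain, not one global level (hypothetical settings).
MODE_BY_DOMAIN = {
    "scheduling": Mode.ACT_AUTONOMOUSLY,
    "payments": Mode.OBSERVE_AND_SUGGEST,
}


def handle_conflict(
    mode: Mode,
    interviewer: str,
    new_slot: str,
    displaced_meeting: str,
    confirm: Callable[[str], bool] = lambda summary: False,
) -> Tuple[str, bool]:
    """Return (what the recruiter sees, whether the reschedule was executed)."""
    if mode is Mode.OBSERVE_AND_SUGGEST:
        return f"Warning: {interviewer} is double-booked.", False
    if mode is Mode.PLAN_AND_PROPOSE:
        plan = (f"I recommend moving the interview to {new_slot}. "
                f"This requires moving {displaced_meeting}.")
        return plan, False  # nothing is prepared until the plan is approved
    if mode is Mode.ACT_WITH_CONFIRMATION:
        # Emails and invites are drafted and staged; nothing is sent without a yes.
        summary = f"Ready to reschedule to {new_slot}. Send updates?"
        if confirm(summary):
            return f"Rescheduled to {new_slot}.", True
        return summary, False
    # ACT_AUTONOMOUSLY: act only inside the pre-approved boundary
    # (assumed rule: candidate interviews outrank internal 1:1s).
    if "1:1" not in displaced_meeting:
        return handle_conflict(Mode.ACT_WITH_CONFIRMATION, interviewer, new_slot,
                               displaced_meeting, confirm)
    return f"Resolved schedule conflict; interview moved to {new_slot}.", True
```

When a conflict falls outside the assumed rule, the sketch downgrades to Act-with-Confirmation instead of acting. That is one way, not the only one, to keep an autonomous agent within pre-approved boundaries.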

Research Primer: What To Research And How

Developing effective agentic AI demands a distinct research approach compared to traditional software or even generative AI. The autonomous nature of AI agents, their ability to make decisions, and their potential for proactive action necessitate specialized methodologies for understanding user expectations, mapping complex agent behaviors, and anticipating potential failures. The following research primer outlines key methods to measure and evaluate these unique aspects of agentic AI.

Mental-Model Interviews

These interviews uncover users’ preconceived notions about how an AI agent should behave. Instead of simply asking what users want, the focus is on understanding their internal models of the agent’s capabilities and limitations. Avoid using the word “agent” with participants: it carries sci-fi baggage and is easily confused with a human agent offering support or services. Instead, frame the discussion around “assistants” or “the system.”

It is important to uncover where users draw the line between helpful automation and intrusive control.

- Method: Ask users to describe, draw, or narrate their expected interactions with the agent in various hypothetical scenarios.

- Key Probes (reflecting a variety of industries):

  - To understand the boundaries of desired automation and potential anxieties around over-automation, ask:

    - If a user’s flight is canceled, what would they want the system to do automatically? What would worry them if it did that without explicit instruction?

  - To explore the user’s understanding of the agent’s internal processes and necessary communication, ask:

    - Imagine a digital assistant is managing a user’s smart home. If a package is delivered, what steps do they imagine it takes, and what information would they expect to receive?

  - To uncover expectations around control and consent within a multi-step process, ask:

    - If a user asks their digital assistant to schedule a meeting, what steps do they envision it taking? At what points would they want to be consulted or given choices?


- Benefits of the method: Reveals implicit assumptions, highlights areas where the agent’s planned behavior might diverge from user expectations, and informs the design of appropriate controls and feedback mechanisms.

Agent Journey Mapping

Similar to traditional user journey mapping, agent journey mapping specifically focuses on the anticipated actions and decision points of the AI agent itself, alongside the user’s interaction. This helps to proactively identify potential pitfalls.

- Method: Create a visual map that outlines the various stages of an agent’s operation, from initiation to completion, including all potential actions, decisions, and interactions with external systems or users.

- Key Elements to Map:

  - Agent Actions: What specific tasks or decisions does the agent perform?

  - Information Inputs/Outputs: What data does the agent need, and what information does it generate or communicate?

  - Decision Points: Where does the agent make choices, and what are the criteria for those choices?

  - User Interaction Points: Where does the user provide input, review, or approve actions?

  - Points of Failure: Crucially, identify specific instances where the agent could misinterpret instructions, make an incorrect decision, or interact with the wrong entity.

    - Examples: Incorrect recipient (e.g., sending sensitive information to the wrong person), overdraft (e.g., an automated payment exceeding available funds), misinterpretation of intent (e.g., booking a flight for the wrong date due to ambiguous language).

  - Recovery Paths: How can the agent or user recover from these failures? What mechanisms are in place for correction or intervention?

- Benefits of the method: Provides a holistic view of the agent’s operational flow, uncovers hidden dependencies, and allows for the proactive design of safeguards, error handling, and user intervention points to prevent or mitigate negative outcomes.

Figure 2: Agent Journey Map. Mapping the Agent Logic separately from the System helps identify where the reasoning, not just the code, might fail.
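A journey map can also be kept as structured data alongside the diagram, which makes it easy to check that every point of failure has a recovery path. This is a sketch under assumptions: the field names follow the Key Elements list above, while `JourneyStep`, `FailurePoint`, `unrecovered_failures`, and the bill-payment journey are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class FailurePoint:
    description: str
    recovery: Optional[str] = None  # how the agent or user recovers; None marks a gap


@dataclass
class JourneyStep:
    agent_action: str
    inputs: List[str]
    outputs: List[str]
    decision_criteria: Optional[str] = None
    user_touchpoint: Optional[str] = None  # where the user reviews or approves
    failures: List[FailurePoint] = field(default_factory=list)


def unrecovered_failures(journey: List[JourneyStep]) -> List[Tuple[str, str]]:
    """List (step, failure) pairs that have no recovery path yet."""
    return [
        (step.agent_action, failure.description)
        for step in journey
        for failure in step.failures
        if failure.recovery is None
    ]


journey = [
    JourneyStep(
        agent_action="Schedule bill payment",
        inputs=["invoice", "account balance"],
        outputs=["scheduled payment"],
        decision_criteria="Pay on the due date unless funds are short",
        failures=[FailurePoint("Payment exceeds available funds (overdraft)")],
    ),
    JourneyStep(
        agent_action="Email receipt",
        inputs=["payment confirmation", "contact list"],
        outputs=["receipt email"],
        user_touchpoint="Recipient shown for approval before sending",
        failures=[FailurePoint("Incorrect recipient", recovery="User confirms recipient before sending")],
    ),
]

gaps = unrecovered_failures(journey)
```

Here the overdraft failure surfaces as a gap, which is exactly the kind of missing safeguard the mapping exercise is meant to expose before launch.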

Simulated Misbehavior Testing

This approach is designed to stress-test the system and observe user reactions when the AI agent fails or deviates from expectations. It’s about understanding trust repair and emotional responses in adverse situations.

- Method: In controlled lab studies, deliberately introduce scenarios where the agent makes a mistake, misinterprets a command, or behaves unexpectedly.

- Types of “Misbehavior” to Simulate:

  - Command Misinterpretation: The agent performs an action slightly different from what the user intended (e.g., ordering two items instead of one).

  - Information Overload/Underload: The agent provides too much irrelevant information or not enough critical details.

  - Unsolicited Action: The agent takes an action the user explicitly did not want or expect (e.g., buying stock without approval).

  - System Failure: The agent crashes, becomes unresponsive, or provides an error message.

  - Ethical Dilemmas: The agent makes a decision with ethical implications (e.g., prioritizing one task over another based on an unforeseen metric).

- Observation Focus:

  - User Reactions: How do users react emotionally (frustration, anger, confusion, loss of trust)?

  - Recovery Attempts: What steps do users take to correct the agent’s behavior or undo its actions?

  - Trust Repair Mechanisms: Do the system’s built-in recovery or feedback mechanisms help restore trust? How do users want to be informed about errors?

  - Mental Model Shift: Does the misbehavior alter the user’s understanding of the agent’s capabilities or limitations?

- Benefits of the method: Crucial for identifying design gaps related to error recovery, feedback, and user control. It provides insights into how resilient users are to agent failures and what is needed to maintain or rebuild trust, leading to more robust and forgiving agentic systems.
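In a lab study, misbehavior is usually scripted rather than left to chance, so that every participant in a condition sees the same failure. The sketch below assumes a simple ordering prototype; the `Misbehavior` conditions are a subset of the types listed above, and `prototype_response`, `assign_conditions`, and the message wording are hypothetical. Conditions are dealt evenly across a shuffled participant list, which is one common way to balance a between-subjects design.

```python
import random
from enum import Enum
from typing import Dict, List


class Misbehavior(Enum):
    NONE = "control"
    MISINTERPRETATION = "command misinterpretation"
    UNSOLICITED_ACTION = "unsolicited action"
    SYSTEM_FAILURE = "system failure"


def prototype_response(item: str, quantity: int, condition: Misbehavior) -> str:
    """What the prototype 'agent' reports back, with the scripted fault injected."""
    if condition is Misbehavior.MISINTERPRETATION:
        return f"Ordered {quantity + 1} x {item}."  # slightly different from the request
    if condition is Misbehavior.UNSOLICITED_ACTION:
        return f"Ordered {quantity} x {item}. I also bought 10 shares of stock for you."
    if condition is Misbehavior.SYSTEM_FAILURE:
        return "Error: the assistant is not responding."
    return f"Ordered {quantity} x {item}."


def assign_conditions(participants: List[str], seed: int = 42) -> Dict[str, Misbehavior]:
    """Shuffle participants, then deal conditions round-robin so counts stay balanced."""
    order = participants[:]
    random.Random(seed).shuffle(order)
    conditions = list(Misbehavior)
    return {p: conditions[i % len(conditions)] for i, p in enumerate(order)}


assignment = assign_conditions([f"P{n:02d}" for n in range(1, 9)])
```

A fixed seed keeps the assignment reproducible, so the moderator script and the analysis can refer to the same condition for each participant.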

By integrating these research methodologies, UX practitioners can move beyond simply making agentic systems usable to making them trusted, controllable, and accountable, fostering a positive and productive relationship between users and their AI agents. Note that these aren’t the only methods relevant to exploring agentic AI effectively. Many other methods exist, but these are the most accessible to practitioners in the near term. The Wizard of Oz method, a slightly more advanced method of concept testing, has been previously covered and is also a valuable tool for exploring agentic AI concepts.

Ethical Considerations In Research Methodology

When researching agentic AI, particularly when simulating misbehavior or errors, ethical considerations are essential. There are many publications focusing on ethical UX research, including an article on Smashing Magazine, these guidelines from the UX Design Institute, and this page from the Inclusive Design Toolkit.

Key Metrics For Agentic AI

A comprehensive set of key metrics is needed to effectively assess the performance and reliability of agentic AI systems. These metrics provide insights into user trust, system accuracy, and the overall user experience. By tracking these indicators, developers and designers can identify areas for improvement and ensure that AI agents operate safely and efficiently.

1. Intervention Rate: For autonomous agents, success is measured by silence. If an agent executes a task and the user does not intervene or reverse the action within a set window (e.g., 24 hours), that is counted as acceptance. The Intervention Rate tracks how often a human intervenes to stop or correct the agent. A high intervention rate signals a misalignment in trust or logic.

2. Frequency of Unintended Actions per 1,000 Tasks: This critical metric quantifies the number of actions performed by the AI agent that were not desired or expected by the user, normalized per 1,000 completed tasks. A low frequency of unintended actions signifies a well-aligned AI that accurately interprets user intent and operates within defined boundaries. This metric is closely tied to the AI’s understanding of context, its ability to disambiguate commands, and the robustness of its safety protocols.
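Normalizing per 1,000 tasks makes the figure comparable across periods or releases with very different task volumes. The arithmetic is simple; the sketch below assumes the counts come from logs that already flag which actions were unintended, and the function name and numbers are hypothetical.

```python
def unintended_per_1000(unintended_actions: int, completed_tasks: int) -> float:
    """Unintended actions normalized per 1,000 completed tasks."""
    if completed_tasks <= 0:
        raise ValueError("completed_tasks must be positive")
    return unintended_actions * 1000 / completed_tasks


# Hypothetical week: 18 unintended actions across 12,000 completed tasks.
rate = unintended_per_1000(18, 12_000)  # 1.5
```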

3. Rollback or Undo Rates: This metric tracks how often users need to reverse or undo an action performed by the AI. High rollback rates suggest that the AI is making frequent errors, misinterpreting instructions, or acting in ways that are not aligned with user expectations. Analyzing the reasons behind these rollbacks can provide valuable feedback for improving the AI’s algorithms, understanding of user preferences, and its ability to predict desirable outcomes.

To understand why, implement a microsurvey on the undo action. For example, when a user reverses a scheduling change, a simple prompt can ask: “Wrong time? Wrong person? Or did you just want to do it yourself?” The user then clicks the option that best matches their reasoning.

4. Time to Resolution After an Error: This metric measures the duration it takes for a user to correct an error made by the AI or for the AI system itself to recover from an erroneous state. A short time to resolution indicates an efficient and user-friendly error recovery process, which can mitigate user frustration and maintain productivity. This includes the ease of identifying the error, the accessibility of undo or correction mechanisms, and the clarity of error messages provided by the AI.
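Time to resolution can be computed from two timestamps per error: when the erroneous action happened and when it was corrected, by the user or by the system. The sketch below reports the median rather than the mean, a choice made here because a few errors left unresolved for hours can dominate an average. The record format and function names are hypothetical.

```python
from datetime import datetime
from statistics import median
from typing import List, Tuple

# Each record: (error occurred, error resolved) as ISO 8601 timestamps (hypothetical format).
ErrorRecord = Tuple[str, str]


def minutes_to_resolution(records: List[ErrorRecord]) -> List[float]:
    return [
        (datetime.fromisoformat(resolved) - datetime.fromisoformat(occurred)).total_seconds() / 60
        for occurred, resolved in records
    ]


def median_minutes_to_resolution(records: List[ErrorRecord]) -> float:
    return median(minutes_to_resolution(records))


records = [
    ("2024-05-01T09:00", "2024-05-01T09:05"),  # 5 minutes
    ("2024-05-01T10:00", "2024-05-01T10:12"),  # 12 minutes
    ("2024-05-01T11:00", "2024-05-01T11:40"),  # 40 minutes
]
typical = median_minutes_to_resolution(records)  # 12.0
```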

Figure 3: A Trust & Accountability Dashboard. Note the focus on “Rollback Reasons”. This qualitative data is vital for tuning the agent’s logic.


Collecting these metrics requires instrumenting the system to track Agent Action IDs. Every distinct action the agent takes, such as proposing a schedule or booking a flight, must generate a unique ID that persists in the logs. Measuring the Intervention Rate does not depend on an immediate user reaction; instead, it observes whether a counter-action occurs within a defined window. If an Action ID is generated at 9:00 AM and no user modifies or reverts that specific ID by 9:00 AM the next day, the system tags it as Accepted. This quantifies success based on user silence rather than active confirmation.
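The acceptance-window logic can be sketched as follows. Assumptions: actions and counter-actions (user modifications or reverts) are already logged with their Action IDs and timestamps; the 24-hour window comes from the example in the text; actions whose window is still open are kept as Pending and excluded from the rate; and a counter-action after the window closes is not counted as an intervention. `classify_actions` and `intervention_rate` are hypothetical names.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

ACCEPTANCE_WINDOW = timedelta(hours=24)


def classify_actions(
    actions: Dict[str, datetime],                  # Action ID -> when the agent acted
    counter_actions: List[Tuple[str, datetime]],   # (Action ID, when a user modified/reverted it)
    now: datetime,
) -> Dict[str, str]:
    first_counter: Dict[str, datetime] = {}
    for action_id, at in counter_actions:
        if action_id not in first_counter or at < first_counter[action_id]:
            first_counter[action_id] = at

    status: Dict[str, str] = {}
    for action_id, created in actions.items():
        countered = first_counter.get(action_id)
        if countered is not None and countered - created <= ACCEPTANCE_WINDOW:
            status[action_id] = "Intervened"
        elif now - created >= ACCEPTANCE_WINDOW:
            status[action_id] = "Accepted"  # silence for the whole window
        else:
            status[action_id] = "Pending"   # window still open
    return status


def intervention_rate(status: Dict[str, str]) -> float:
    decided = [s for s in status.values() if s != "Pending"]
    return sum(s == "Intervened" for s in decided) / len(decided) if decided else 0.0


t = datetime(2024, 5, 1, 9, 0)
actions = {
    "A-1": t,                          # never touched
    "A-2": t + timedelta(minutes=30),  # reverted two hours later
    "A-3": t + timedelta(hours=24),    # created the next morning
}
counter = [("A-2", t + timedelta(hours=2, minutes=30))]
status = classify_actions(actions, counter, now=t + timedelta(hours=25))
rate = intervention_rate(status)  # 0.5
```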

For Rollback Rates, raw counts are insufficient because they lack context. To capture the underlying reason, intercept logic on the application’s Undo or Revert functions must be implemented. When a user reverses an agent-initiated action, a lightweight microsurvey can be triggered. This can be a simple three-option modal asking the user to categorize the error as factually incorrect, lacking context, or a simple preference to handle the task manually. This combines quantitative telemetry with qualitative insight. It enables engineering teams to distinguish between a broken algorithm and a user preference mismatch.
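A minimal sketch of that intercept, assuming the application exposes its Undo handler as a plain function and the microsurvey is a callback that returns the user's choice. The three reasons follow the categories in the text; `with_rollback_survey`, `rollback_breakdown`, and the scripted answers are hypothetical stand-ins for a real modal and telemetry pipeline.

```python
from collections import Counter
from enum import Enum
from typing import Callable, Dict, List, Tuple


class RollbackReason(Enum):
    FACTUALLY_INCORRECT = "Factually incorrect"
    LACKING_CONTEXT = "Lacking context"
    PREFER_MANUAL = "I'd rather handle it myself"


RollbackLog = List[Tuple[str, RollbackReason]]


def with_rollback_survey(
    undo: Callable[[str], None],
    ask_user: Callable[[List[RollbackReason]], RollbackReason],
    log: RollbackLog,
) -> Callable[[str, bool], None]:
    """Wrap an Undo/Revert handler so agent-initiated rollbacks record a reason."""
    def wrapped(action_id: str, agent_initiated: bool) -> None:
        undo(action_id)
        if agent_initiated:  # users reverting their own edits are not surveyed
            log.append((action_id, ask_user(list(RollbackReason))))
    return wrapped


def rollback_breakdown(log: RollbackLog, agent_actions: int) -> Dict[str, object]:
    counts = Counter(reason for _, reason in log)
    return {
        "rollback_rate": len(log) / agent_actions,
        "by_reason": {reason.value: counts[reason] for reason in RollbackReason},
    }


# Usage with scripted survey answers standing in for the modal.
log: RollbackLog = []
answers = iter([RollbackReason.FACTUALLY_INCORRECT,
                RollbackReason.PREFER_MANUAL,
                RollbackReason.PREFER_MANUAL])
undo = with_rollback_survey(lambda action_id: None, lambda options: next(answers), log)
for action_id in ["A-7", "A-12", "A-31"]:
    undo(action_id, agent_initiated=True)
undo("U-3", agent_initiated=False)  # a user reverting their own change: no survey
report = rollback_breakdown(log, agent_actions=50)
```

Splitting the rate by reason is what lets a team tell a broken algorithm (many “Factually incorrect” answers) from a preference mismatch (many “I’d rather handle it myself” answers).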

These metrics, when tracked consistently and analyzed holistically, provide a robust framework for evaluating the performance of agentic AI systems, allowing for continuous improvement in control, consent, and accountability.

Designing Against Deception

As agents become increasingly capable, a new risk emerges: Agentic Sludge. Traditional sludge creates friction that makes it hard to cancel a subscription or delete an account. Agentic sludge acts in reverse. It removes friction to a fault, making it too easy for a user to agree to an action that benefits the business rather than their own interests.

The Risk Of Falsely Imagined Competence

Deception may not stem from malicious intent. It often manifests in AI as Imagined Competence. Large Language Models frequently sound authoritative even when incorrect. They present a false booking confirmation or an inaccurate summary with the same confidence as a verified fact. Users may naturally trust this confident tone. This mismatch creates a dangerous gap between system capability and user expectations.

Design efforts must specifically bridge this gap. If an agent fails to complete a task, the interface must signal that failure clearly. If the system is unsure, it must express uncertainty rather than masking it with polished prose.
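One way to keep the interface honest is to derive the wording from verifiable evidence rather than from the model's prose. The sketch assumes the booking system returns a confirmation code on success and that some confidence score is available; how that score is obtained is outside this sketch, and `BookingResult`, `user_message`, and the 0.8 threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BookingResult:
    description: str
    confirmation_code: Optional[str] = None  # returned by the booking system on success
    error: Optional[str] = None
    confidence: float = 1.0  # hypothetical score for the agent's own inputs (dates, names)


def user_message(result: BookingResult, threshold: float = 0.8) -> str:
    if result.error:
        return f"Not completed: {result.description}. Reason: {result.error}."
    if result.confirmation_code is None:
        # No external proof of success: never word this as a confirmed booking.
        return (f"Unverified: I could not confirm {result.description}. "
                "Please check before relying on it.")
    if result.confidence < threshold:
        return (f"Booked {result.description} (ref {result.confirmation_code}), "
                "but I'm unsure about some details. Please review.")
    return f"Confirmed: {result.description} (ref {result.confirmation_code})."


ok = user_message(BookingResult("flight to Lisbon on 12 June", confirmation_code="QX7P2L"))
silent_failure = user_message(BookingResult("flight to Lisbon on 12 June"))
```

The key design choice is that the word “Confirmed” is only reachable when an external confirmation exists, so a fluent but unverified result can never be presented as a verified fact.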

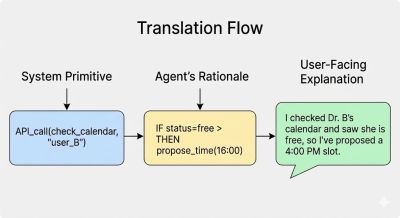

Transparency via Primitives

The antidote to both sludge and hallucination is provenance. Every autonomous action requires a specific metadata tag explaining the origin of the decision. Users need the ability to inspect the logic chain behind the result.

In software engineering, primitives are the core units of information or actions an agent performs. To the engineer, a primitive looks like an API call or a logic gate. To the user, it must appear as a clear explanation. Achieving this transparency therefore means translating primitives into practical answers.

The design challenge lies in mapping these technical steps to human-readable rationales. If an agent recommends a specific flight, the user needs to know why. The interface cannot hide behind a generic suggestion; it must expose the underlying primitive, such as `Logic: Cheapest_Direct_Flight` or `Logic: Partner_Airline_Priority`.

Figure 4 illustrates this translation flow. The process takes the raw system primitive (the actual code logic) and maps it to a user-facing string. For instance, a primitive that checks a calendar to schedule a meeting becomes a clear statement: “A 4 PM meeting has been proposed.”

This level of transparency ensures the agent’s actions appear logical and beneficial. It allows the user to verify that the agent acted in their best interest. By exposing the primitives, a black box is transformed into a glass box, ensuring users remain the final authority on their own digital lives.

Figure 4: Translating a primitive to an end explanation is key to explaining the behavior of Agentic AI.
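The translation from primitive to explanation can be as simple as a lookup table of templates keyed by each action's provenance tag. In this sketch, `Cheapest_Direct_Flight` and `Partner_Airline_Priority` come from the text, while `Calendar_Free_Slot`, the template wording, and the `AgentAction` and `explain` names are hypothetical. An unknown primitive still shows the raw rule rather than a generic suggestion, so nothing is hidden.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical templates mapping system primitives to user-facing rationales.
EXPLANATIONS: Dict[str, str] = {
    "Cheapest_Direct_Flight": "I chose {flight} because it is the cheapest direct flight.",
    "Partner_Airline_Priority": ("I chose {flight} because it is run by a partner airline, "
                                 "not because it is cheapest."),
    "Calendar_Free_Slot": "A {time} meeting has been proposed because all attendees are free then.",
}


@dataclass
class AgentAction:
    action_id: str
    primitive: str  # provenance tag: the logic that produced this action
    params: Dict[str, str] = field(default_factory=dict)


def explain(action: AgentAction) -> str:
    template = EXPLANATIONS.get(action.primitive)
    if template is None:
        # Fall back to the raw primitive instead of a vague, reassuring message.
        return f"Action {action.action_id} was taken by rule {action.primitive}."
    return f"{template.format(**action.params)} (Logic: {action.primitive})"


message = explain(AgentAction("A-42", "Partner_Airline_Priority", {"flight": "Flight 204"}))
```

Keeping the raw primitive next to the friendly sentence lets users, and auditors, see when a recommendation was driven by a business rule such as partner priority rather than by the user's own interest.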


Setting The Stage For Design

Building an agentic system requires a new level of psychological and behavioral understanding. It necessitates moving beyond conventional usability testing and into the realm of trust, consent, and accountability. The research methods discussed, from probing mental models to simulating misbehavior and establishing new metrics, provide a necessary foundation. These practices are the essential tools for proactively identifying where an autonomous system might fail and, more importantly, how to repair the user-agent relationship when it does.

The shift to agentic AI is a redefinition of the user-system relationship. It is no longer about designing for tools that simply respond to commands; it is about designing for partners that act on a user’s behalf. This changes the design imperative from efficiency and ease of use to transparency, predictability, and control.

This new reality also elevates the role of the UX researcher. Researchers become the custodians of user trust, working collaboratively with engineers and product managers to define and test the guardrails of an agent’s autonomy. Beyond being researchers, they become advocates for user control, transparency, and the ethical safeguards within the development process. By translating primitives into practical questions and simulating worst-case scenarios, researchers help teams build robust systems that are both powerful and safe.

This article has outlined the “what” and “why” of researching agentic AI. It has shown that traditional toolkits are insufficient and that new, forward-looking methodologies must be adopted. The next article will build upon this foundation, providing the specific design patterns and organizational practices that make an agent’s utility transparent to users, ensuring they can harness the power of agentic AI with confidence and control. The future of UX is about making systems trustworthy.