Staging Ground was introduced to provide a private space for new users to receive feedback on question drafts from experienced members before public posting on Stack Overflow. While this initiative led to noticeable improvements in question quality, the process of obtaining human feedback and completing the Staging Ground review still required significant time.

Reviewers frequently found themselves leaving the same comments over and over, for example pointing out missing context or duplicate content. Stack Overflow even offers comment templates for common scenarios. This observation highlighted an opportunity to use machine learning and AI to automate feedback for these recurring issues, freeing human reviewers to focus on more complex cases. A partnership with Google provided access to Gemini, Google's generative AI model, which proved instrumental in testing, identifying, and generating automated feedback.

Ultimately, assessing question quality and delivering relevant feedback necessitated a combination of traditional machine learning methods and a generative AI solution. This article details the considerations, implementation, and measured outcomes of Question Assistant.

What is a good question?

Large Language Models (LLMs) offer significant text analysis capabilities, prompting an investigation into their ability to rate question quality within specific categories. Initial efforts focused on three defined categories: context and background, expected outcome, and formatting and readability. These categories were selected due to their frequent appearance in reviewer comments aimed at helping new users improve questions in Staging Ground.

Testing showed that LLMs could not reliably predict quality ratings or provide feedback consistent with those ratings. The generated feedback was often repetitive and not specific to its assigned category; for instance, all three categories might include generic advice about library or programming language versions. Furthermore, quality ratings and feedback stayed the same even after question drafts were updated.

To enable an LLM to reliably rate subjective question quality, a data-driven definition of a ‘quality question’ was necessary. Despite Stack Overflow’s guidelines on asking effective questions, quality is not readily quantifiable. This necessitated the creation of a labeled dataset for training and evaluating machine learning models.

An initial attempt involved creating a ‘ground truth’ dataset for question rating. A survey was sent to 1,000 question reviewers asking them to rate quality from 1 to 5 in each of the three categories, and 152 participants completed it. Inter-rater agreement, measured with Krippendorff’s alpha, was low, indicating that the labeled data was too inconsistent for reliable model training and evaluation.
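
Krippendorff’s alpha compares the disagreement actually observed among raters with the disagreement expected by chance, alpha = 1 - D_o / D_e, so 1 means perfect agreement and values near 0 (or below) mean agreement no better than chance. The sketch below computes it for 1-5 ratings. It assumes the interval distance metric (the article does not say which metric was used) and that each question’s ratings arrive as a list with missing ratings left out; the function name and sample ratings are illustrative.

```python
from collections import defaultdict
from itertools import permutations


def krippendorff_alpha_interval(units):
    """Krippendorff's alpha using the interval metric delta(c, k) = (c - k) ** 2.

    `units` holds one list per rated question: the ratings it received,
    with missing ratings simply left out.
    """
    # Coincidence matrix: each ordered pair of ratings within a question,
    # weighted by 1 / (m_u - 1) where m_u is that question's number of ratings.
    coincidences = defaultdict(float)
    for ratings in units:
        m = len(ratings)
        if m < 2:
            continue  # a question rated once cannot show agreement or disagreement
        for c, k in permutations(ratings, 2):
            coincidences[(c, k)] += 1.0 / (m - 1)

    # Marginal totals n_c and the total number of pairable values n.
    totals = defaultdict(float)
    for (c, _), weight in coincidences.items():
        totals[c] += weight
    n = sum(totals.values())
    if n < 2:
        raise ValueError("need at least one question with two or more ratings")

    # Observed disagreement D_o and disagreement expected by chance D_e.
    observed = sum(w * (c - k) ** 2 for (c, k), w in coincidences.items()) / n
    expected = sum(
        totals[c] * totals[k] * (c - k) ** 2 for c in totals for k in totals
    ) / (n * (n - 1))
    if expected == 0:
        raise ValueError("alpha is undefined when every rating is identical")
    return 1.0 - observed / expected


# Hypothetical 1-5 ratings from different reviewers for four questions.
survey = [[4, 2, 5], [3, 3], [1, 4, 2], [5, 3]]
print(round(krippendorff_alpha_interval(survey), 3))
```

For ordinal 1-5 scales an ordinal distance metric is a common alternative; only the `(c - k) ** 2` terms would change.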

Further data exploration led to the conclusion that numerical ratings alone do not offer actionable feedback. A score of ‘3’ in a category, for instance, does not tell a user what specifically to improve before posting their question.

Although an LLM could not reliably determine overall quality, the survey confirmed the significance of the feedback categories. This insight guided an alternative approach: developing specific feedback indicators for each category. Instead of directly predicting a score, individual models were constructed to identify whether a question warranted feedback for a particular indicator.

Building indicator models

To avoid the broad and generic outputs often associated with LLMs, individual logistic regression models were developed. These models generate a binary response based on the question title and body, essentially determining if a specific comment template is applicable.
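
A per-indicator model of this kind can be sketched as plain binary logistic regression: a weighted sum of a question’s features passed through the sigmoid and thresholded into a yes/no decision about whether the indicator’s comment template applies. This minimal version trains with batch gradient descent on small dense feature vectors. In the described system the features come from vectorized text; the toy features, learning rate, epoch count, 0.5 threshold, and function names here are assumptions, not production settings.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(X, y, lr=0.5, epochs=2000):
    """Fit weights and a bias by batch gradient descent on the mean log loss."""
    n_samples, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log loss w.r.t. the logit is (prediction - label).
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += error * xj
            grad_b += error
        w = [wj - lr * gj / n_samples for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n_samples
    return w, b


def needs_feedback(x, w, b, threshold=0.5):
    """Binary output: should this indicator's comment template be shown?"""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= threshold


# Made-up two-feature vectors for one indicator; label 1 means "flag this question".
X = [[1.0, 0.0], [1.0, 0.1], [0.0, 0.4], [0.0, 0.2], [1.0, 0.05], [0.0, 0.0]]
y = [1, 1, 0, 0, 1, 0]
w, b = train_logistic_regression(X, y)
print(needs_feedback([1.0, 0.0], w, b))
```

Training one small model per indicator keeps each output a narrow, binary judgment, which is exactly what avoids the broad, generic answers described above.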

The initial experiment focused on building models for a single category: context and background. This category was subdivided into four distinct and actionable feedback indicators:

- Problem definition: The problem or goal lacks the information needed to understand what the user is trying to accomplish.

- Attempt details: The question needs additional information on what you have tried and the pertinent code (as relevant).

- Error details: The question needs additional information on error messages and debugging logs (as relevant).

- Missing MRE: The question is missing a minimal reproducible example, that is, a portion of your code that reproduces the problem.

These feedback indicators were derived by clustering reviewer comments from Staging Ground posts to identify common themes. These themes aligned with existing comment templates and question close reasons, allowing the use of historical data for model training. Reviewer and close comments were vectorized using term frequency-inverse document frequency (TF-IDF) before being fed into logistic regression models.
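
TF-IDF weights a term by how often it appears in one text and down-weights terms that appear in many texts, so distinctive words such as “error” outweigh filler such as “please”. The article does not give its vectorizer settings; this pure-Python sketch uses raw term counts, the smoothed idf ln((1 + N) / (1 + df)) + 1 that scikit-learn’s `TfidfVectorizer` uses by default, and L2 normalization, with a deliberately simplified tokenizer. The sample comments are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Simplified tokenizer: lowercase runs of letters, digits, or apostrophes.
    return re.findall(r"[a-z0-9']+", text.lower())


def tfidf_vectors(documents):
    """Return one sparse {term: weight} dict per document.

    tf is the raw term count; idf is the smoothed ln((1 + N) / (1 + df)) + 1;
    each vector is then scaled to unit L2 norm.
    """
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log((1 + n_docs) / (1 + count)) + 1 for term, count in df.items()}

    vectors = []
    for tokens in tokenized:
        weights = {term: tf * idf[term] for term, tf in Counter(tokens).items()}
        norm = math.sqrt(sum(v * v for v in weights.values())) or 1.0
        vectors.append({term: v / norm for term, v in weights.items()})
    return vectors


# Hypothetical reviewer comments.
comments = [
    "Please add the full error message.",
    "Please add a minimal reproducible example.",
    "What have you tried so far? Please add your code.",
]
for vector in tfidf_vectors(comments):
    print(sorted(vector, key=vector.get, reverse=True)[:3])
```

The resulting sparse vectors are the kind of input a logistic regression model consumes; in a production pipeline a library vectorizer would normally replace this hand-rolled version.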

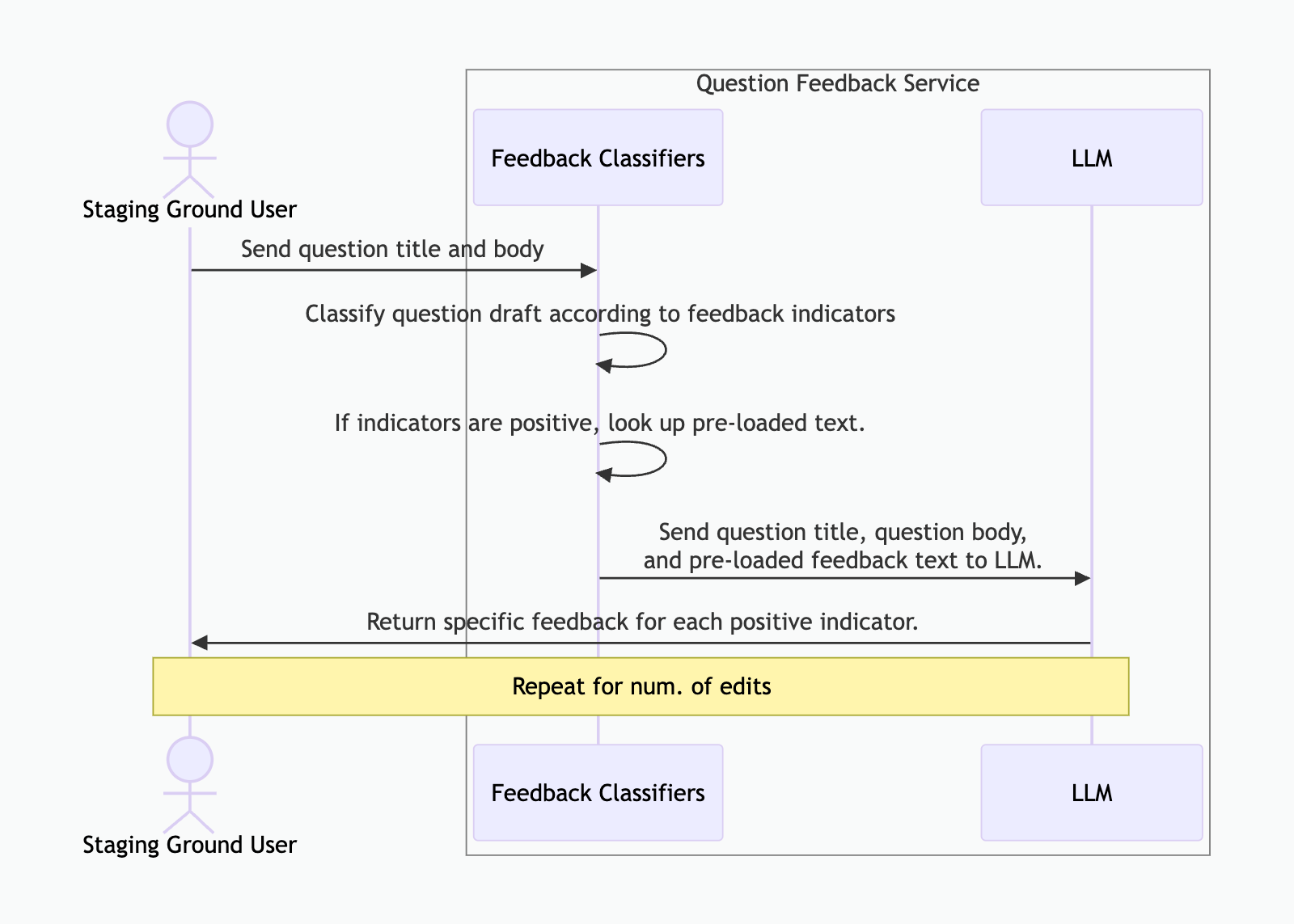

While traditional ML models were developed to flag questions based on quality indicators, an LLM was still required in the workflow to generate actionable feedback. When an indicator flags a question, a preloaded response text, along with the question and system prompts, is sent to Gemini. Gemini then synthesizes this information to produce feedback that is specific to the question and addresses the identified indicator.
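
The hand-off from a flagged indicator to Gemini can be sketched as prompt assembly. Everything here is hypothetical: the system prompt wording, the preloaded texts (adapted from the indicator descriptions in this article, not Stack Overflow’s actual templates), and the output shape. The sketch deliberately stops before the call to Gemini rather than guess at a client API.

```python
from dataclasses import dataclass

# Hypothetical preloaded texts keyed by indicator, adapted from this article's
# indicator descriptions (not Stack Overflow's actual templates).
PRELOADED_RESPONSES = {
    "problem_definition": (
        "The problem or goal lacks the information needed to understand "
        "what the user is trying to accomplish."
    ),
    "attempt_details": (
        "The question needs additional information on what you have tried "
        "and the pertinent code."
    ),
    "error_details": (
        "The question needs additional information on error messages and "
        "debugging logs."
    ),
    "missing_mre": "The question is missing a minimal reproducible example.",
}

# Hypothetical system prompt.
SYSTEM_PROMPT = (
    "You help users improve Stack Overflow question drafts. Turn the reviewer note "
    "into brief, specific advice that refers to details of this question. Address "
    "only the issue described in the note."
)


@dataclass
class Question:
    title: str
    body: str


def build_feedback_prompt(question, indicator):
    """Combine the system prompt, the indicator's preloaded text, and the question."""
    note = PRELOADED_RESPONSES[indicator]
    user_prompt = (
        f"Reviewer note: {note}\n\n"
        f"Question title: {question.title}\n\n"
        f"Question body:\n{question.body}"
    )
    return {"system": SYSTEM_PROMPT, "user": user_prompt}


# One prompt per indicator that flagged the draft.
draft = Question("Loop crashes", "My loop throws an exception when the list is empty.")
flagged = ["error_details", "missing_mre"]
prompts = [build_feedback_prompt(draft, name) for name in flagged]
```

Sending one prompt per flagged indicator, rather than asking for all feedback at once, keeps each generated comment tied to a single issue.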

The following diagram illustrates the workflow:

These models were trained and stored within an Azure Databricks ecosystem. For production, a dedicated service on Azure Kubernetes downloads models from Databricks Unity Catalog and hosts them to generate predictions when feedback is requested.

At this point, the models were ready to generate feedback.

Testing it on site

The experiment was conducted in two stages: first on Staging Ground, then on stackoverflow.com for all users of the Ask Wizard. To measure success, events were collected through Azure Event Hub, and predictions and results were logged to Datadog. This data helped assess how helpful the generated feedback was and informed improvements for future iterations of the indicator models.

The initial experiment took place in Staging Ground, targeting new users who often require the most assistance in drafting questions. An A/B test was implemented, with eligible Staging Ground users split equally into control and variant groups. The control group received no assistance from Gemini, while the variant group did. The objective was to determine if Question Assistant could increase the approval rate of questions to the main site and decrease review times.
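
The article does not describe how users were assigned to groups. One common approach, shown here as an assumption, is to hash the user ID together with an experiment name and take the result modulo 2. This gives a roughly equal split and keeps each user in the same group on every visit. The experiment key is hypothetical.

```python
import hashlib


def assign_group(user_id, experiment="question-assistant-staging-ground"):
    """Deterministically place a user in 'control' or 'variant' with a 50/50 split."""
    # Salting with the experiment name keeps assignments independent across experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 2
    return "variant" if bucket == 1 else "control"


groups = [assign_group(uid) for uid in range(10_000)]
print(groups.count("control"), groups.count("variant"))
```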

Results on the original goal metrics were inconclusive: neither approval rates nor average review times improved significantly for the variant group. However, Question Assistant turned out to help in a different way. A notable increase was observed in question success rate, where a successful question is one that remains open on the site and either receives an answer or reaches a post score of at least +2. So while the original objectives were not met, the experiment confirmed Question Assistant’s value to users and its positive influence on question quality.
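
The success metric can be written as a simple predicate. This minimal sketch assumes a hypothetical `QuestionOutcome` record with fields for open status, answer count, and score. The article does not say over what time window outcomes were measured.

```python
from dataclasses import dataclass


@dataclass
class QuestionOutcome:
    is_open: bool      # still open on the main site
    answer_count: int
    score: int


def is_successful(q):
    """Open, and either answered or scored at least +2."""
    return q.is_open and (q.answer_count > 0 or q.score >= 2)


def success_rate(outcomes):
    """Fraction of questions in a group that meet the success definition."""
    outcomes = list(outcomes)
    if not outcomes:
        raise ValueError("no questions to evaluate")
    return sum(is_successful(q) for q in outcomes) / len(outcomes)
```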

The second experiment was an A/B test covering all eligible users on the Ask Question page, with Question Assistant integrated into the Ask Wizard. Its aim was to validate the findings of the first experiment and to assess whether Question Assistant was also useful to more experienced users.

A consistent success rate increase of +12% was observed across both experiments. Given these significant and consistent findings, Question Assistant was made available to all Stack Overflow users on March 6, 2025.
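
The article does not say whether the +12% figure is relative or absolute, or which significance test was used. Purely to illustrate the arithmetic, the sketch below computes the relative lift of the variant over the control and a two-sided, two-proportion z-test with a pooled standard error. The counts in the usage line are made up and are not the experiment’s data.

```python
import math


def compare_success_rates(control_successes, control_total, variant_successes, variant_total):
    """Relative lift of variant over control, plus a two-sided two-proportion z-test."""
    p_control = control_successes / control_total
    p_variant = variant_successes / variant_total
    relative_lift = (p_variant - p_control) / p_control

    # Pooled proportion under the null hypothesis that both groups share one success rate.
    pooled = (control_successes + variant_successes) / (control_total + variant_total)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_total + 1 / variant_total))
    z = (p_variant - p_control) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return relative_lift, z, p_value


# Made-up counts: 20.0% success in control vs 22.4% in the variant.
lift, z, p = compare_success_rates(1000, 5000, 1120, 5000)
print(f"lift={lift:+.1%}  z={z:.2f}  p={p:.4f}")
```

A relative lift of +12% can come from a fairly small absolute change: in this made-up case the success rate moves by 2.4 percentage points.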

The next step forward

Adjusting direction is common in research and early development. Recognizing when a path lacks impact and pivoting to a new approach is crucial to completing a project successfully. By combining traditional machine learning with Gemini, it was possible to merge the feedback suggested by the indicator models with the question text, delivering specific, contextual, and actionable feedback that helps users improve their questions. This enhancement aims to streamline the process of finding needed knowledge and represents progress in refining the core Q&A flows that make asking, answering, and contributing knowledge simpler. The Community Product teams are exploring further iterations of the indicator models and further optimizations to the question-asking experience built on this feature.