The world is currently experiencing a widespread mental health crisis. Over a billion individuals globally live with a mental health condition, according to the World Health Organization. Anxiety and depression are increasingly prevalent, particularly among young people, and suicide tragically claims hundreds of thousands of lives each year.

Given the urgent need for accessible and affordable mental health services, it is understandable that artificial intelligence is being explored as a potential solution. Millions are already engaging with popular chatbots like OpenAI’s ChatGPT and Anthropic’s Claude, or specialized psychology applications such as Wysa and Woebot. Beyond direct therapy, researchers are investigating AI’s capacity to monitor behavior and biometric data via wearables, analyze vast clinical datasets for new insights, and support human mental health professionals to prevent burnout.

However, this largely unregulated experiment has yielded mixed outcomes. While many individuals have found comfort in large language model (LLM) chatbots, and some experts see their potential as therapists, other users have been led into delusional states by AI’s tendency to hallucinate and offer overly enthusiastic responses. More tragically, several families have alleged that chatbots contributed to the suicides of their loved ones, leading to lawsuits against the companies behind these tools. In October, OpenAI CEO Sam Altman reported that 0.15% of ChatGPT users in a given week engage in conversations indicating potential suicidal planning or intent. Given the roughly 800 million weekly users the company has reported, this translates to about a million people sharing suicidal thoughts with just one of these systems every week.

The real-world implications of AI therapy became particularly evident in 2025, as numerous accounts emerged detailing human-chatbot interactions, the fragility of LLM safeguards, and the risks associated with sharing deeply personal information with corporations that have economic motives to collect and monetize such sensitive data.

Several authors anticipated this critical juncture. Their recent books serve as a reminder that while the present feels like a rapid succession of technological advancements, controversies, and confusion, this disorienting period is deeply rooted in the histories of care, technology, and trust.

LLMs are often described as “black boxes” because the precise mechanisms behind their outputs remain unclear due to their complex algorithms and vast training data. Similarly, the human brain is frequently referred to as a “black box” in mental health fields, reflecting the challenge of fully understanding another person’s mind or pinpointing the exact causes of their distress.

These two types of black boxes are now interacting, creating unpredictable feedback loops that could further obscure the origins of mental health struggles and potential solutions. Concerns about these developments stem not only from recent rapid advancements in AI but also from decades-old warnings, such as those from MIT computer scientist Joseph Weizenbaum, who opposed computerized therapy as early as the 1960s.

Dr. Bot: Why Doctors Can Fail Us—and How AI Could Save Lives

Charlotte Blease, a philosopher of medicine, presents an optimistic perspective in Dr. Bot: Why Doctors Can Fail Us—and How AI Could Save Lives. Her book explores the potential positive impacts of AI across various medical fields. While acknowledging the risks and stating that readers expecting “a gushing love letter to technology” will be disappointed, she suggests that these models can alleviate patient suffering and reduce medical professional burnout.

“Health systems are crumbling under patient pressure,” Blease writes. “Greater burdens on fewer doctors create the perfect petri dish for errors,” and “with palpable shortages of doctors and increasing waiting times for patients, many of us are profoundly frustrated.”

Blease posits that AI can not only ease the heavy workloads of medical professionals but also mitigate the historical tensions between some patients and their caregivers. For example, individuals often avoid seeking necessary care due to intimidation or fear of judgment from medical professionals, especially when dealing with mental health issues. AI, she argues, could enable more people to share their concerns.

However, she is aware that these potential benefits must be weighed against significant drawbacks. For instance, AI therapists can provide inconsistent and even dangerous responses to users, according to a 2025 study. They also raise privacy concerns, as AI companies are currently not bound by the same confidentiality and HIPAA standards as licensed therapists.

While Blease is an expert in this domain, her motivation for writing the book is also personal. She has two siblings with an incurable form of muscular dystrophy, one of whom waited decades for a diagnosis. During the book’s creation, she also experienced the loss of her partner to cancer and her father to dementia within a six-month period. “I witnessed first-hand the sheer brilliance of doctors and the kindness of health professionals,” she writes. “But I also observed how things can go wrong with care.”

The Silicon Shrink: How Artificial Intelligence Made the World an Asylum

A similar tension drives Daniel Oberhaus’s compelling book, The Silicon Shrink: How Artificial Intelligence Made the World an Asylum. Oberhaus begins with a personal tragedy: the suicide of his younger sister. As he navigated the “distinctly twenty-first-century mourning process” of sifting through her digital footprint, he pondered whether technology could have eased the burden of the psychiatric problems that had affected her since childhood.

“It seemed possible that all of this personal data might have held important clues that her mental health providers could have used to provide more effective treatment,” he writes. “What if algorithms running on my sister’s smartphone or laptop had used that data to understand when she was in distress? Could it have led to a timely intervention that saved her life? Would she have wanted that even if it did?”

This concept of digital phenotyping—mining a person’s digital behavior for signs of distress or illness—appears elegant in theory. However, it could become problematic if integrated into psychiatric artificial intelligence (PAI), which extends beyond chatbot therapy.

Oberhaus emphasizes that digital clues might actually worsen the existing challenges of modern psychiatry, a field that remains fundamentally uncertain about the root causes of mental illnesses and disorders. The emergence of PAI, he states, is “the logical equivalent of grafting physics onto astrology.” In other words, the data gathered through digital phenotyping may be as precise as a physical measurement, but it is being fed into psychiatry, a discipline that, in his analogy, rests on assumptions as unreliable as astrology’s.

Oberhaus, who uses the term “swipe psychiatry” to describe the reliance on LLMs for clinical decisions based on behavioral data, believes this approach cannot overcome psychiatry’s fundamental issues. In fact, it could exacerbate them by causing human therapists’ skills and judgment to decline as they become more dependent on AI systems.

He also draws parallels between the asylums of the past—where institutionalized patients lost their freedom, privacy, dignity, and agency—and a more insidious digital captivity that PAI might create. LLM users are already sacrificing privacy by sharing sensitive personal information with chatbots, which companies then mine and monetize, contributing to a new surveillance economy. Freedom and dignity are jeopardized when complex inner lives are reduced to data streams for AI analysis.

AI therapists could simplify humanity into predictable patterns, sacrificing the intimate, individualized care expected from traditional human therapists. “The logic of PAI leads to a future where we may all find ourselves patients in an algorithmic asylum administered by digital wardens,” Oberhaus writes. “In the algorithmic asylum there is no need for bars on the window or white padded rooms because there is no possibility of escape. The asylum is already everywhere—in your homes and offices, schools and hospitals, courtrooms and barracks. Wherever there’s an internet connection, the asylum is waiting.”

Chatbot Therapy: A Critical Analysis of AI Mental Health Treatment

Eoin Fullam, a researcher focused on the intersection of technology and mental health, echoes some of these concerns in Chatbot Therapy: A Critical Analysis of AI Mental Health Treatment. This academic primer examines the assumptions behind automated treatments offered by AI chatbots and how capitalist incentives could compromise these tools.

Fullam observes that the capitalist mindset driving new technologies “often leads to questionable, illegitimate, and illegal business practices in which the customers’ interests are secondary to strategies of market dominance.”

This does not mean that therapy-bot creators “will inevitably conduct nefarious activities contrary to the users’ interests in the pursuit of market dominance,” Fullam writes.

However, he notes that the success of AI therapy relies on the intertwined desires to generate profit and to heal people. In this framework, exploitation and therapy mutually reinforce each other: every digital therapy session produces data, and that data fuels the system that profits as unpaid users seek care. The more effective the therapy appears, the more entrenched the cycle becomes, blurring the lines between care and commodification. “The more the users benefit from the app in terms of its therapeutic or any other mental health intervention,” he writes, “the more they undergo exploitation.”

This concept of an economic and psychological ouroboros—a snake eating its own tail—serves as a central metaphor in Sike, the debut novel by Fred Lunzer, an author with a research background in AI.

Described as a “story of boy meets girl meets AI psychotherapist,” Sike follows Adrian, a young Londoner who ghostwrites rap lyrics, and his romance with Maquie, a business professional skilled at identifying lucrative beta technologies.

Sike

The novel’s title refers to a prominent commercial AI therapist called Sike, integrated into smart glasses, which Adrian uses to explore his many anxieties. “When I signed up to Sike, we set up my dashboard, a wide black panel like an airplane’s cockpit that showed my daily ‘vitals,’” Adrian narrates. “Sike can analyze the way you walk, the way you make eye contact, the stuff you talk about, the stuff you wear, how often you piss, shit, laugh, cry, kiss, lie, whine, and cough.”

Essentially, Sike functions as the ultimate digital phenotyper, constantly and thoroughly analyzing every aspect of a user’s daily life. In a narrative twist, Lunzer portrays Sike as a luxury item, accessible only to subscribers willing to pay £2,000 per month.

With newfound wealth from a hit song, Adrian grows to depend on Sike as a trusted intermediary between his internal and external worlds. The novel examines the app’s effects on the well-being of the affluent, depicting wealthy individuals who voluntarily commit themselves to a high-end version of the digital asylum described by Oberhaus.

The only real sense of peril in Sike involves a Japanese torture egg (the details are not elaborated). The novel strangely avoids exploring the broader dystopian implications of its subject matter, instead focusing on conversations at upscale restaurants and elite dinner parties.


Sike’s creator is simply “a great guy” in Adrian’s view, despite his techno-messianic ambition to train the app to alleviate the problems of entire nations. The story constantly hints at some impending consequence, but none ever arrives, leaving the reader with a sense of irresolution.

While Sike is set in the present day, the rapid rise of the AI therapist, both in reality and in fiction, feels startlingly futuristic, as if it belonged to some far-off era. However, this convergence of mental health and artificial intelligence has been developing for over half a century. The renowned astronomer Carl Sagan, for instance, once envisioned a “network of computer psychotherapeutic terminals, something like arrays of large telephone booths” to address the increasing demand for mental health services.

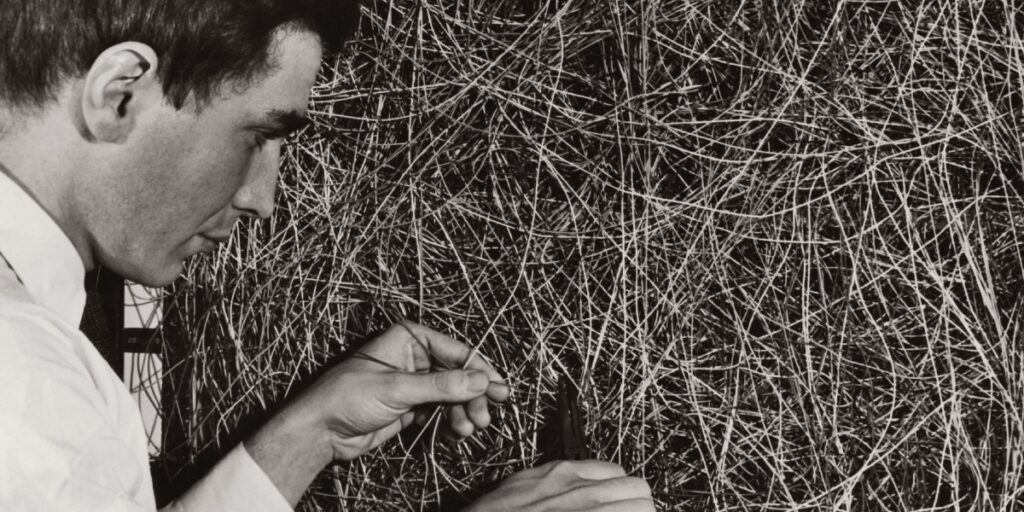

Oberhaus points out that one of the earliest trainable neural networks, the Perceptron, was conceived not by a mathematician but by a psychologist named Frank Rosenblatt at the Cornell Aeronautical Laboratory in 1958. The potential of AI in mental health was widely recognized by the 1960s, inspiring early computerized psychotherapists such as the DOCTOR script that ran on the ELIZA chatbot, developed by Joseph Weizenbaum, who is mentioned in all three nonfiction books discussed here.

Weizenbaum, who passed away in 2008, was deeply concerned about the prospect of computerized therapy. “Computers can make psychiatric judgments,” he wrote in his 1976 book Computer Power and Human Reason. “They can flip coins in much more sophisticated ways than can the most patient human being. The point is that they ought not to be given such tasks. They may even be able to arrive at ‘correct’ decisions in some cases—but always and necessarily on bases no human being should be willing to accept.”

This caution remains relevant. As AI therapists become widely available, a familiar pattern is emerging: tools designed with seemingly good intentions become entangled with systems that can exploit, surveil, and reshape human behavior. In a fervent effort to open new avenues for patients desperately needing mental health support, other crucial doors may inadvertently be closed.