Beyond a simple rebrand, Stack Internal marks a significant advancement. It aims to be a highly reliable source of knowledge for technologists and plays an increasing role in supporting the intelligence layer of enterprise AI.

Built for an AI-First Era

Stack Overflow for Teams has historically linked individuals with essential knowledge. Stack Internal advances this by evolving the platform into an enterprise knowledge intelligence layer. This layer is designed to capture, validate, and deliver reliable information directly into the systems and workflows that teams use daily.

Whether a user is a developer in an IDE, a product manager in Microsoft Teams, or a data scientist using AI copilots, Stack Internal is designed to keep the knowledge they need accurate, up to date, and accessible wherever they work.

New Features in the Stack Internal 2025.8 Release

This release introduces robust features aimed at helping organizations centralize dispersed knowledge, link AI agents with current answers, and expedite processes such as onboarding, delivery, and decision-making.

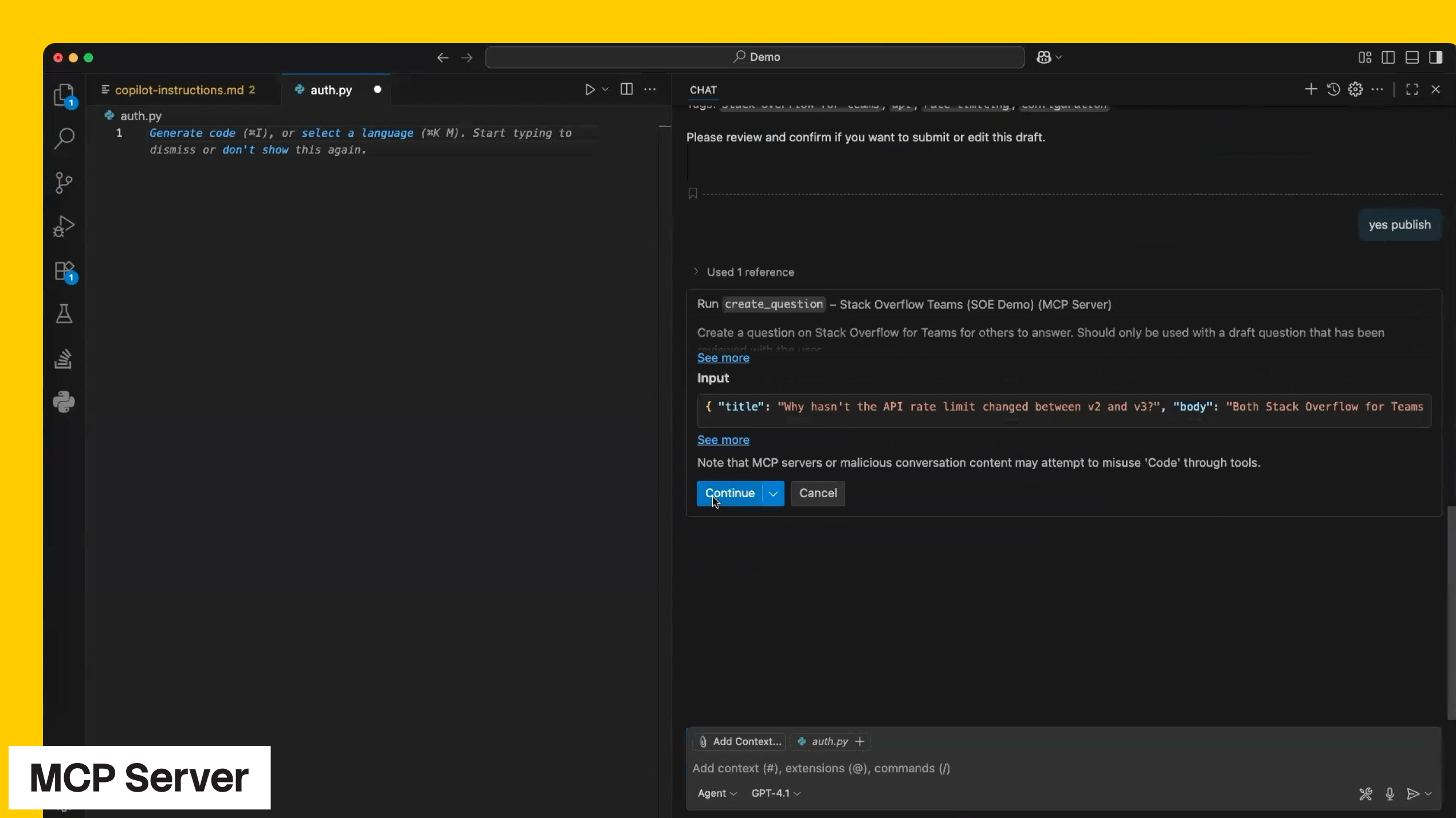

MCP Server: Free Trial Extended

The Model Context Protocol (MCP) Server connects agentic AI developer tools, such as GitHub Copilot, ChatGPT, and Cursor, directly to an enterprise’s validated knowledge within Stack Internal.

Key capabilities of the MCP server include:

- Grounded, attributed AI responses based on real organizational knowledge

- Bi-directional flows, allowing agents to suggest updates and keep knowledge current

- Full privacy and control, with server deployments running inside your infrastructure

The Stack Internal MCP Server is available to all enterprise customers, and its free trial period has been extended. This allows organizations to use AI capabilities while upholding governance, accuracy, and trust.
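As a rough illustration of what an agent sends over MCP, the sketch below builds a JSON-RPC 2.0 `tools/call` request, which is the message shape the Model Context Protocol uses for tool invocation. The tool name `search_knowledge` and its `query` argument are hypothetical placeholders; the tools actually exposed by the Stack Internal MCP Server are not described here and should be discovered from the server itself.

```python
import json
from itertools import count

# Monotonic request ids, as JSON-RPC expects each request to carry a unique id.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request for MCP's `tools/call` method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and argument, used only for illustration.
request = build_tool_call("search_knowledge", {"query": "How do we rotate API keys?"})
print(json.dumps(request, indent=2))
```

In practice an MCP client library handles this framing, and an agent would first list the server's tools before calling any of them.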

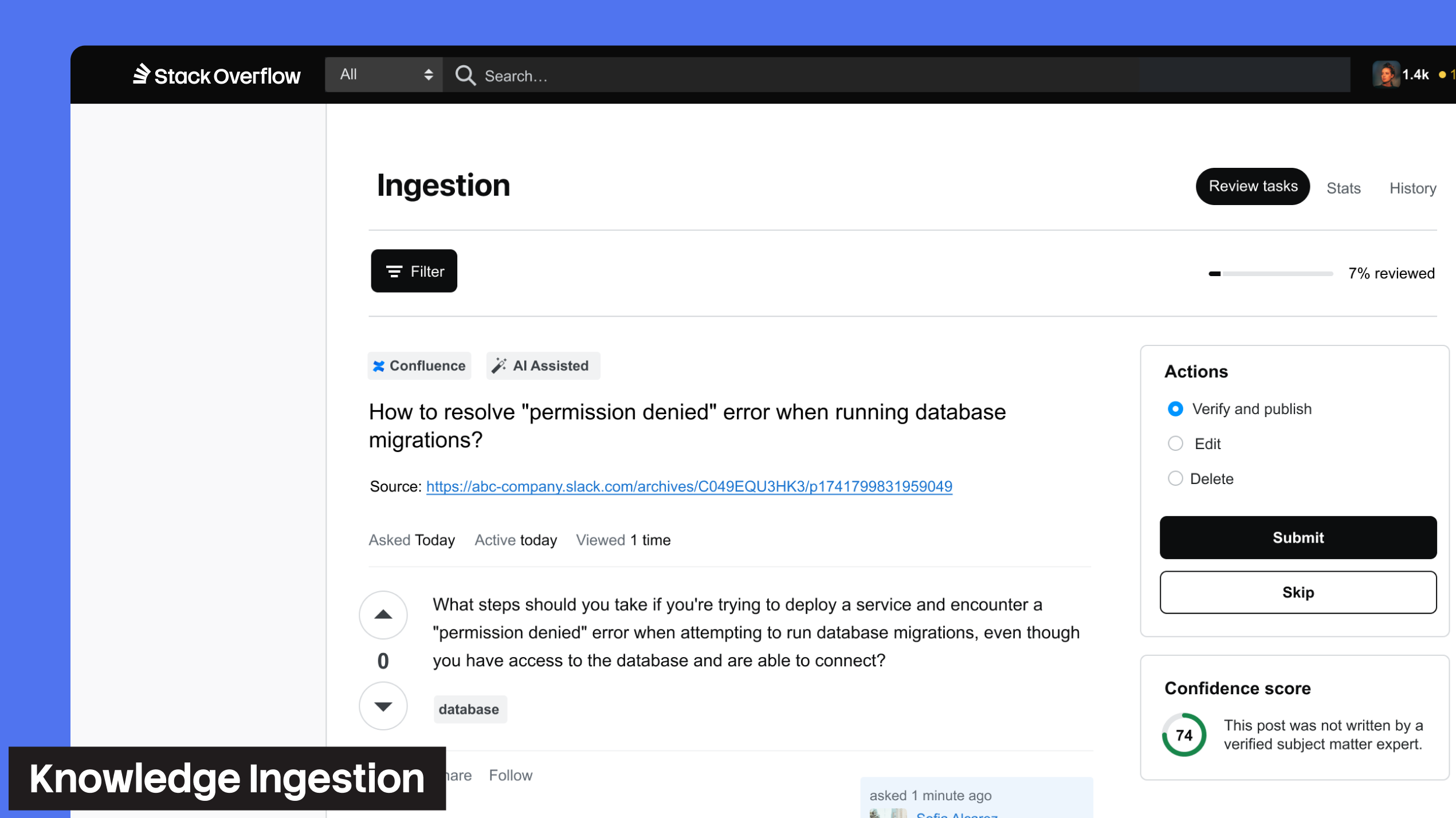

Knowledge Ingestion Pilot

A significant hurdle in enterprise knowledge management is the fragmentation of information. Knowledge Ingestion addresses this by converting existing content from tools such as Confluence, Microsoft Teams, Slack, and ServiceNow into reliable, structured knowledge within Stack Internal.

This feature uses AI-powered ingestion, confidence scoring, and a scalable human-in-the-loop validation process so that only high-quality, relevant knowledge is published.
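One way to picture how confidence scoring and human validation might fit together is a simple triage step. The sketch below is an assumption-laden illustration, not Stack Internal's actual pipeline: the `Candidate` type, the queue names, and both thresholds are invented for the example. It routes every candidate above a minimum score to a human reviewer and only uses the score to set review priority.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    source: str        # e.g. "Confluence" or "Slack"
    text: str          # content extracted from the source tool
    confidence: float  # hypothetical model score in [0.0, 1.0]


def triage(candidates, min_score=0.5, priority_score=0.9):
    """Sort candidates into review queues by confidence.

    Nothing is published here: every kept candidate still goes to a human.
    Thresholds are illustrative assumptions.
    """
    queues = {"priority_review": [], "standard_review": [], "rejected": []}
    for candidate in candidates:
        if candidate.confidence >= priority_score:
            queues["priority_review"].append(candidate)
        elif candidate.confidence >= min_score:
            queues["standard_review"].append(candidate)
        else:
            queues["rejected"].append(candidate)
    return queues


items = [
    Candidate("Confluence", "Deploy runbook for the billing service", 0.95),
    Candidate("Slack", "Thread on flaky integration tests", 0.62),
    Candidate("Slack", "Lunch plans", 0.08),
]
queues = triage(items)
print({name: len(queue) for name, queue in queues.items()})
```

Keeping a reviewer in the loop for both queues reflects the human-in-the-loop step described above; the score only decides what reviewers see first and what is filtered out as noise.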

Through Knowledge Ingestion, teams can achieve the following:

- Seed and validate historical content in Stack Internal

- Reduce time-to-value and onboarding effort

- Create a strong foundation for AI-native workflows like search, copilots, and autonomous agents

The pilot program is currently available to a select group of enterprise customers. Interested organizations can contact their Success Manager for further details on Knowledge Ingestion use cases and its potential benefits.

The Future with Stack Internal

As organizations rapidly embrace AI, a fundamental principle persists: the efficacy of AI is directly tied to the quality of the knowledge it is built upon.

Stack Internal provides the groundwork for enterprise AI by keeping knowledge accurate, validated, and relevant to actual work processes. It captures reliable knowledge, keeps it current, and integrates it directly into the tools teams use, including Microsoft 365, Slack, IDEs, and AI copilots. The result is knowledge that teams and AI agents can act on, which in turn supports a competitive advantage.

Key differentiators for Stack Internal include:

- Knowledge starts where work happens

- Knowledge is enhanced through a combination of AI and human validation

- Knowledge integrates seamlessly into the tools and agents that power contemporary work environments

This release signifies more than just a name change; it represents the dawn of a new era for enterprise knowledge. It aims to assist organizations in expanding expertise, accelerating development, and implementing AI responsibly.