Karrot is Korea’s leading local community service, built around the connections that can form within a neighborhood. Beyond its flea market, it strengthens connections between neighbors, local stores, and public institutions, with a warm and active neighborhood as its core value.

Karrot uses a recommendation system to connect users with content that matches their interests and neighborhoods. The home screen of the Karrot application is continuously personalized based on user activity patterns, without requiring users to select interest categories. The objective is to deliver fresh and engaging content and, through it, to improve user satisfaction. Within the recommendation system, the feature platform plays a crucial role alongside the machine learning (ML) recommendation model. The feature platform functions as a data store that holds and delivers the data the ML recommendation model needs, such as user behavior history and article information.

This two-part series begins by outlining the motivations, requirements, and solution architecture, with a focus on feature serving. Part 2 will cover the real-time and batch ingestion processes for collecting features into an online store, along with technical strategies for stable operation.

Background of the feature platform at Karrot

Karrot identified the necessity for a feature platform in early 2021, approximately two years after integrating a recommendation system into its application. At that time, the recommendation system significantly boosted various metrics. By presenting personalized feeds instead of purely chronological ones, the company observed over a 30% increase in click-through rates and improved user satisfaction. As the recommendation system’s influence grew, the ML team naturally sought to advance the system further.

In ML-driven systems, high-quality input data, such as clicks and conversion actions, is vital. These inputs are commonly referred to as features. At Karrot, user behavior logs, action logs, and status values are categorized as user features, while article-related logs are termed article features.

To enhance the accuracy of personalized recommendations, diverse feature types are required. An efficient system capable of managing these features and quickly delivering them to ML recommendation models is essential. In this context, serving refers to the real-time data provision needed when the recommendation system suggests personalized content to users. However, the existing recommendation system’s feature management approach had several limitations, primarily:

- Dependency on the flea market server – The initial recommendation system was an internal library within the flea market server, so every change to the recommendation logic and every added feature required changes to the web application’s source code. This reduced deployment flexibility and hindered resource optimization.

- Limited scalability of recommendation logic and features – The original system directly relied on the flea market database and only processed flea market articles. This prevented expansion to new article types, such as local community, local jobs, and advertisements, which are managed by different data sources. Additionally, feature-related code was hardcoded, making feature exploration, addition, or modification challenging.

- Lack of feature data source reliability – Features were retrieved from various repositories such as Amazon Simple Storage Service (Amazon S3), Amazon ElastiCache, and Amazon Aurora. Because schemas and collection pipelines were inconsistent across these sources, data quality was unreliable, which made it difficult to keep features up to date and consistent.

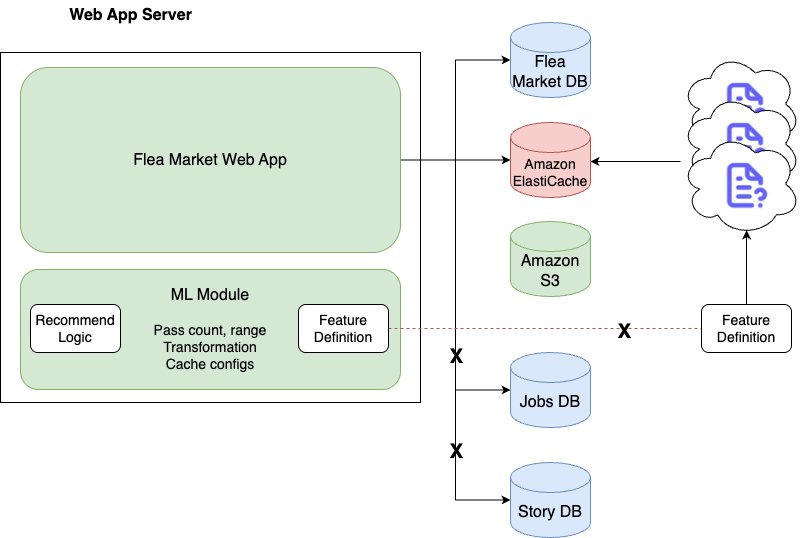

The following diagram illustrates the initial recommendation system backend structure.

To address these issues, a new central system was required to efficiently support feature management, real-time ingestion, and serving, leading to the initiation of the feature platform project.

Requirements of the feature platform

The following functional requirements were established for the feature platform as an independent service:

- Record and quickly serve the top N most recent user actions, allowing customization of both the N value and the lookup period.

- Support user-specific features, such as notification keywords, in addition to action-based features.

- Process features from various article types, extending beyond just flea market articles.

- Handle arbitrary data types for all features, including primitive types, lists, sets, and maps.

- Provide real-time updates for both action features and user characteristic features.

- Offer flexibility in feature lists, counts, and lookup periods for each request.
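The first and last requirements amount to a query of the form "the N newest actions within a lookup period", with both values chosen per request. The following is a minimal in-memory sketch of that semantics only. The `Action` type, the `top_n_recent` function, and the use of epoch seconds are assumptions made for illustration and are not part of Karrot's platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    timestamp: int  # epoch seconds (assumed unit)
    article_id: str


def top_n_recent(actions, n, now, lookup_period):
    """Return up to n actions from the last lookup_period seconds, newest first."""
    cutoff = now - lookup_period
    in_window = [a for a in actions if a.timestamp >= cutoff]
    return sorted(in_window, key=lambda a: a.timestamp, reverse=True)[:n]


clicks = [Action(100, "a"), Action(250, "b"), Action(300, "c"), Action(40, "d")]
recent = top_n_recent(clicks, n=2, now=300, lookup_period=200)
```

Here `recent` holds the clicks on articles `c` and `b`. The click on `d` falls outside the lookup period, and the click on `a` is cut off by `n`.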

To fulfill these functional requirements, a new platform was essential. This platform needed three core capabilities: real-time ingestion of diverse feature types, storage with a consistent schema, and rapid response to varied query requests. While these requirements initially appeared broad, designing a generalized structure facilitated efficient configuration of data ingestion pipelines, storage methods, and serving schemas, resulting in clearer development objectives.

In addition to functional requirements, the technical requirements included:

- Serving traffic: 1,500 or more requests per second (RPS)

- Ingestion traffic: 400 or more writes per second (WPS)

- Top N values: 30–50

- Single feature size: Up to 8 KB

- Total number of features: 3 billion or more

At the time, the variety and quantity of features in use were limited and the recommendation models were straightforward, so the technical requirements were modest. Because rapid growth was anticipated, however, targets were set higher than the initial requirements. As of February 2025, serving and ingestion traffic has grown to approximately 90 times the initial requirements, and the total number of features has grown by a factor of several hundred. The feature platform’s highly scalable architecture, detailed in subsequent sections, absorbed this growth.

Solution overview

The following diagram illustrates the architecture of the feature platform.

The feature platform comprises three main components: feature serving, a stream ingestion pipeline, and a batch ingestion pipeline.

Part 1 of this series focuses on feature serving. This is the core function for receiving client requests and delivering the necessary features. Karrot designed this system with four primary components:

- Server – A server that processes feature serving requests, deployed as a pod on Amazon Elastic Kubernetes Service (Amazon EKS).

- Remote cache – A shared remote cache layer for servers, utilizing Amazon ElastiCache.

- Database – A persistence layer for feature storage, using Amazon DynamoDB.

- On-demand feature server – A server that delivers features not suitable for storage in the remote cache and database due to compliance issues, or those requiring real-time calculations for each request.

From a data store perspective, feature serving must deliver high-cardinality features with low latency at scale. Karrot implemented a multi-level cache and categorized serving strategies based on feature characteristics:

- Local cache (tier 1 cache) – An in-memory store within the server, ideal for small, frequently accessed data or data requiring fast response times.

- Remote cache (tier 2 cache) – Suitable for medium-sized, frequently accessed data.

- Database (tier 3 cache) – Appropriate for large data that is not frequently accessed or is less sensitive to response times.

Schema design

The feature platform groups multiple features using the concept of feature groups, similar to column families. All feature groups are defined through a feature group schema, known as feature group specifications, with each specification outlining the feature group’s name, required features, and other attributes.

Based on this concept, the key design is defined as follows:

- Partition key: <feature_group_name>#<feature_group_id>

- Sort key: <feature_group_timestamp> or a string representing null

To illustrate this in practice, consider an example of a feature group representing recently clicked flea market articles by user 1234. Imagine the following scenario:

- Feature group name: recent_user_clicked_fleaMarketArticles

- User ID: 1234

- Click timestamp: 987654321

- Features within the feature group:

  - Clicked article ID: a

  - User session ID: 1111

In this example, the keys and feature group are structured as follows:

- Partition key: recent_user_clicked_fleaMarketArticles#1234

- Sort key: 987654321

- Value: {"0": "a", "1": "1111"}

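Features defined in the feature group specification keep a fixed order, and each feature’s position in that order is used like an enum value when the feature group is saved. In the example above, "0" stands for the clicked article ID and "1" for the user session ID.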
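The key layout and the position-based encoding can be sketched as follows. This is an illustration under stated assumptions rather than the platform's actual code. `FeatureGroupSpec`, the helper names, the feature names `clicked_article_id` and `user_session_id`, and the literal `"null"` placeholder are all hypothetical. The source only says the sort key may be "a string representing null".

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

NULL_SORT_KEY = "null"  # assumed placeholder for feature groups without a timestamp


@dataclass(frozen=True)
class FeatureGroupSpec:
    """Hypothetical stand-in for a feature group specification."""
    name: str
    features: Tuple[str, ...]  # fixed order; the index is what gets stored


def partition_key(spec: FeatureGroupSpec, group_id: str) -> str:
    return f"{spec.name}#{group_id}"


def sort_key(timestamp: Optional[int]) -> str:
    return NULL_SORT_KEY if timestamp is None else str(timestamp)


def encode(spec: FeatureGroupSpec, values: Dict[str, str]) -> Dict[str, str]:
    """Replace each feature name with its position in the specification."""
    return {str(i): values[name] for i, name in enumerate(spec.features) if name in values}


def decode(spec: FeatureGroupSpec, stored: Dict[str, str]) -> Dict[str, str]:
    """Map stored positions back to feature names."""
    return {spec.features[int(i)]: value for i, value in stored.items()}


spec = FeatureGroupSpec(
    name="recent_user_clicked_fleaMarketArticles",
    features=("clicked_article_id", "user_session_id"),
)
item = {
    "partition_key": partition_key(spec, "1234"),
    "sort_key": sort_key(987654321),
    "value": encode(spec, {"clicked_article_id": "a", "user_session_id": "1111"}),
}
```

Storing positions instead of names keeps each item small. The cost is that the order in a specification cannot change once data has been written with it.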

Feature serving read/write flow

The feature platform employs a multi-level cache and database for feature serving, as depicted in the following diagram.

To explain this process, consider how the system retrieves feature groups 1, 2, and 3 from flea market articles. The read flow (solid lines in the diagram) demonstrates data access optimization using a multi-level cache strategy:

- Upon receiving a query request, the local cache is checked first.

- Data not found in the local cache is then searched in ElastiCache.

- If data is not in ElastiCache, it is sought in DynamoDB.

- Feature groups discovered at each stage are collected and returned as the final response.

The write flow (dotted lines in the diagram) involves the following steps:

- Feature groups that miss at a cache level are written back to that level once they are found in a lower level.

  - Data found in ElastiCache is stored in the local cache.

  - Data found in DynamoDB is stored in both ElastiCache and the local cache.

- Cache write operations are performed asynchronously in the background.
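The read and write flows above can be sketched together as a read-through lookup with background backfill. The sketch makes several simplifying assumptions: plain dictionaries stand in for the local cache, ElastiCache, and DynamoDB, a small thread pool stands in for whatever asynchronous mechanism the platform uses, and TTLs are left out. `TieredReader` and its methods are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor


class TieredReader:
    """Read-through lookup over three dict-like tiers (stand-ins for the real stores)."""

    def __init__(self, local, remote, database):
        self.local, self.remote, self.database = local, remote, database
        self._pool = ThreadPoolExecutor(max_workers=2)

    def get_many(self, keys):
        found, to_local, to_remote = {}, {}, {}
        pending = list(keys)

        # 1. Check the local cache first.
        for key in pending:
            if key in self.local:
                found[key] = self.local[key]
        pending = [k for k in pending if k not in found]

        # 2. Look up local misses in the remote cache; hits must be copied to local.
        for key in pending:
            if key in self.remote:
                found[key] = to_local[key] = self.remote[key]
        pending = [k for k in pending if k not in found]

        # 3. Look up the rest in the database; hits must be copied to both caches.
        for key in pending:
            if key in self.database:
                found[key] = to_local[key] = to_remote[key] = self.database[key]

        # Backfill runs off the request path; the future lets callers wait if needed.
        backfill = self._pool.submit(self._backfill, to_local, to_remote)
        return found, backfill

    def _backfill(self, to_local, to_remote):
        self.remote.update(to_remote)
        self.local.update(to_local)


local, remote = {"fg1": "v1"}, {"fg2": "v2"}
database = {"fg2": "v2", "fg3": "v3"}
reader = TieredReader(local, remote, database)
found, backfill = reader.get_many(["fg1", "fg2", "fg3", "fg4"])
backfill.result()  # wait only so the effect on the caches can be inspected
```

After the backfill completes, `local` holds all three existing feature groups and `remote` holds `fg2` and `fg3`. The key `fg4` exists nowhere, so it is absent from the response and would reach the database again on every request, which is the penetration problem discussed next.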

This flow is intended to keep the cache levels consistent with one another and to speed up subsequent reads. Ideally, serving would operate smoothly with just this flow. In practice, three problems arose: cache misses, cache consistency, and cache penetration:

- Cache miss problem – Frequent cache misses increase response times and burden subsequent cache levels or the database. Karrot utilizes the Probabilistic Early Expirations (PEE) technique to proactively refresh data likely to be retrieved again, thereby maintaining low latency and mitigating cache stampede.

- Cache consistency problem – Incorrectly set Time-To-Live (TTL) values for a cache can impact recommendation quality or reduce system efficiency. Karrot employs separate soft and hard TTLs and sometimes uses a write-through caching strategy to synchronize the cache and database, addressing consistency issues. Additionally, jitter is incorporated to spread out TTL deletion times, alleviating the cache stampede for feature groups written simultaneously.

- Cache penetration problem – Continuous queries for non-existent feature groups can lead to unnecessary DynamoDB queries, increasing costs and response times. The platform resolves this through negative caching, storing information about non-existent feature groups to reduce database load. The system also monitors the ratio of missing feature groups in DynamoDB, negative cache hit rates, and potential consistency problems.
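The three mitigations can be illustrated in one small sketch. It is not Karrot's implementation. The early-expiration test uses one well-known formulation in which the head start is drawn as `-recompute_seconds * beta * ln(u)`. The soft/hard TTL semantics (serve a stale value after the soft TTL, treat the entry as absent after the hard TTL) are an assumption, since the source does not define them. `FeatureCache`, `MISSING`, and all parameter names are hypothetical.

```python
import math
import random
import time
from dataclasses import dataclass

MISSING = object()  # negative-cache marker: the database has no such feature group


def should_refresh_early(now, expiry, recompute_seconds, beta=1.0, rng=random.random):
    """Probabilistic early expiration: refresh becomes likelier as expiry approaches."""
    # 1 - rng() lies in (0, 1], so the logarithm is defined and never positive.
    head_start = -recompute_seconds * beta * math.log(1.0 - rng())
    return now + head_start >= expiry


def jittered_ttl(base_ttl, ratio=0.1, rng=random.random):
    """Spread expirations over [base * (1 - ratio), base * (1 + ratio))."""
    return base_ttl * (1.0 + ratio * (2.0 * rng() - 1.0))


@dataclass
class Entry:
    value: object
    soft_expiry: float  # past this: still served, but a refresh is due (assumed)
    hard_expiry: float  # past this: treated as absent


class FeatureCache:
    def __init__(self, soft_ttl, hard_ttl, negative_ttl,
                 clock=time.monotonic, rng=random.random):
        self.soft_ttl, self.hard_ttl, self.negative_ttl = soft_ttl, hard_ttl, negative_ttl
        self.clock, self.rng = clock, rng
        self.entries = {}

    def put(self, key, value):
        now = self.clock()
        if value is MISSING:
            # Negative entries get a single, typically short, TTL.
            expiry = now + jittered_ttl(self.negative_ttl, rng=self.rng)
            self.entries[key] = Entry(MISSING, expiry, expiry)
        else:
            soft = now + jittered_ttl(self.soft_ttl, rng=self.rng)
            hard = now + jittered_ttl(self.hard_ttl, rng=self.rng)
            self.entries[key] = Entry(value, soft, hard)

    def get(self, key):
        """Return (status, value); status is 'miss', 'negative', 'fresh', or 'stale'."""
        entry = self.entries.get(key)
        now = self.clock()
        if entry is None or now >= entry.hard_expiry:
            self.entries.pop(key, None)
            return "miss", None
        if entry.value is MISSING:
            return "negative", None
        if now >= entry.soft_expiry:
            return "stale", entry.value
        return "fresh", entry.value
```

A caller would skip the database on a `negative` result, trigger a background refresh on `stale`, and could apply `should_refresh_early` to `fresh` entries to refresh hot feature groups shortly before they expire. The hard TTL should be comfortably larger than the soft TTL so that jitter cannot reorder the two.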

Future improvements for feature serving

Karrot is considering the following enhancements for its feature serving solution:

- Large data caching – Demand for storing large data features has been increasing due to Karrot’s growth and the rising number of features. The rapid expansion of large language models (LLMs) and the associated demand for embeddings have also increased the data size to be stored. Consequently, more efficient serving methods using embedded databases are being evaluated.

- Efficient use of cache memory – Even with an initially optimal TTL value, efficiency tends to decline as usage patterns change and models are updated, and monitoring becomes harder as more feature groups are defined. Determining the optimal TTL value for a cache from data should be straightforward. Karrot is considering methods that use memory efficiently while preserving recommendation quality, by keeping cache hit rates high and preventing feature group loss. The current feature platform attempts to cache a feature group after a single cache miss, which treats every missed feature group as worth caching and is inherently inefficient. A more advanced policy is needed to identify, based on data, the feature groups that are truly worth caching and to cache only those.

- Multi-level cache optimization – The feature platform currently has a multi-level cache structure, and complexity will increase if an embedded database is integrated in the future. Therefore, it is essential to identify and apply optimal settings across different cache levels. Future efforts will aim to maximize efficiency by considering varied cache level configurations.
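As one illustration of what a more selective caching policy might look like, the sketch below admits a feature group to the cache only after it has missed a configurable number of times. This is a hypothetical example of the general idea, not a policy the source says Karrot has chosen. The class name and threshold are assumptions.

```python
from collections import Counter


class MissCountAdmission:
    """Admit a key to the cache only after it has missed `threshold` times."""

    def __init__(self, threshold=2):
        self.threshold = threshold
        self.misses = Counter()

    def record_miss(self, key):
        """Count a miss and return True when the key should now be cached."""
        self.misses[key] += 1
        if self.misses[key] >= self.threshold:
            del self.misses[key]  # start over once the key has been admitted
            return True
        return False


policy = MissCountAdmission(threshold=2)
decisions = [policy.record_miss("fg") for _ in range(3)]
```

With a threshold of 2, the first miss is not cached, the second is, and the count then starts over. A production policy would also need to bound the counter's memory and age out old counts, which this sketch omits.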

Conclusion

This post detailed how Karrot developed its feature platform, focusing on its feature serving capabilities. As of February 2025, the platform reliably handles over 100,000 RPS with P99 latency under 30 milliseconds, providing stable recommendation services through a scalable architecture that efficiently manages traffic increases.

Part 2 will explore how features are generated using consistent feature schemas and ingestion pipelines through the feature platform.